Sunday, September 30, 2018

Longitudinal analysis of the human "Exposome" shows amazing promise and some scary stuff!

This concept is BIG, has far-reaching and hard-to-fathom potential consequences, and is something we should all be thinking about.

I'm not qualified to talk about any of that (it may not stop me), but what about the stuff I do kinda understand? First off -- this is the paper (direct link here)!

What is the Exposome? The authors define it here as "....human airborne environmental biotic and abiotic exposures..." so....the stuff in our air that we're getting exposed to, coming from living things or non-living.

Interested? You should be!

Okay -- so they monitor 15 individuals around the world for up to 2 years. They had a "wearable device" of some kind that collected samples of the stuff they're exposed to. They do a ton of genetics stuff on the people and on the samples that are collected (I think. This is a Cell paper, it's like 100 pages, and I do have a job).

Now the interesting stuff ---> for the exposure stuff, the samples are run on a UHPLC-coupled Exactive using a cool mixed-mode column (presumably to separate both polar and nonpolar compounds well) and -- the details are kind of fuzzy in the methods -- it appears they ran each sample in positive and negative mode (or with pos/neg switching?) at 100,000 resolution.

The data was processed with XCMS, and someone on this team is an R fanatic (or an epidemiologist -- which might be redundant). I've never seen so many individual packages used in a single study -- but the genomics and the geographic data are all statistically tied together and ----

We're exposed to TONS of stuff, from both living and non-living sources. And -- geography plays a huge role. And -- there are some clear-looking (though mysterious in their actual meaning) links between what you are exposed to and what is going on in your genetics.

Probably not the right response -- but I am certainly, definitely, completely not qualified to judge. However, this is a really thought-provoking paper in a field where our technologies will obviously be able to help!

Friday, September 28, 2018

20 must-read papers for proteomics students, courtesy of the Liu lab!

I can't claim any credit for this awesome list that Yangsheng Liu posted on his lab webpage. I don't even know who originally found it!

I will be permanently linking this list over there --> in the Newbies section later!

You can check out this great list here!

Thursday, September 27, 2018

Positional phospho-isomers are a problem -- get a Thesaurus!!

(Image stolen from SadandUseless.com [please don't sue me])!

I've been dying to talk about this one since seeing a talk sometime in the spring about it!

Did you know that phosphorylations are commonly associated with phosphorylations on amino acids right beside them or just a few amino acids away?!? I didn't, but I've asked a bunch of biologists and they said it's true.

This is one of the many cool insights that you could find in this new preprint on THESAURUS!

Before you get too excited -- Thesaurus is for DIA and PRM data. Wait -- You're more excited?!?!

(Groans.....okay...last one, probably....)

Thesaurus is software. You might have guessed that from a couple of the names on the paper. And it -- okay -- figure 1 is awesome and explains it better than I possibly could.

Somebody is good at making flowcharts. The end result of running through that logical circle is going to be a test of whether phosphorylation at E or F is the best match ---

Okay ---- last stolen picture for this post -- but this is the ABSOLUTE COOLEST PART --- what if it is both of them? Because it biologically makes sense that it could be. No -- I don't mean "phosphoRS doesn't have enough information to discern which one it is and gives you 50/50, so you just report both." I mean that biologically it can and does totally happen that you get an almost perfectly co-eluting pair of peptides that are phosphopeptides E and F (obviously not in the example above, but it really does happen a lot (proof in this great paper!)).

But this is the ABSOLUTE COOLEST PART (wait. I said that. I'm excited.): in modern dd-MS2 -- we skip the second one! Almost always! We're so confident in our massively improved peak shapes and in our instruments' efficiency at making an ID from the first fragmentation that most of us trigger MS/MS at some (by historical standards) ludicrously low peptide intensity -- and then use dynamic exclusion to keep peptides of that exact mass from being fragmented again for huge amounts of time. So if 2 positional isomers elute at almost the same time, we never see the second one.

Is it possible that our improved methods and instruments are actually decreasing our phosphopeptide ID recovery? Yeah, it totally is.
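
To make the dynamic exclusion problem concrete, here's a toy simulation in Python. It is a deliberately simplified sketch (no Top-N cycle, no charge states, no MS1 scheduling), and the m/z values, intensity threshold, and exclusion windows are all made-up numbers, not instrument defaults. Positional isomers have identical precursor m/z, so with a 60 s exclusion the second isomer never gets an MS/MS scan; shrink the window to 10 s and it does.

```python
from dataclasses import dataclass


@dataclass
class Precursor:
    rt_min: float     # retention time (minutes)
    mz: float         # precursor m/z
    intensity: float  # MS1 intensity


def dd_ms2_with_exclusion(precursors, intensity_threshold, exclusion_s, tol_ppm):
    """Simplified dd-MS2 loop: which precursors get an MS/MS scan?

    A precursor is fragmented if it passes the intensity threshold and its m/z
    is not within tol_ppm of anything fragmented in the last exclusion_s seconds.
    """
    excluded = []  # (m/z, time in minutes when the exclusion expires)
    picked = []
    for p in sorted(precursors, key=lambda q: q.rt_min):
        # Forget exclusion entries that have expired by this retention time
        excluded = [(mz, until) for mz, until in excluded if until > p.rt_min]
        if p.intensity < intensity_threshold:
            continue
        if any(abs(p.mz - mz) / mz * 1e6 <= tol_ppm for mz, _ in excluded):
            continue  # same precursor mass fragmented recently, so it is skipped
        picked.append(p)
        excluded.append((p.mz, p.rt_min + exclusion_s / 60.0))
    return picked


# Hypothetical positional isomers E and F: identical m/z, eluting 15 s apart
iso_e = Precursor(rt_min=30.00, mz=652.7893, intensity=5e5)
other = Precursor(rt_min=30.10, mz=700.3512, intensity=3e5)
iso_f = Precursor(rt_min=30.25, mz=652.7893, intensity=4e5)

for window_s in (60, 10):
    picked = dd_ms2_with_exclusion([iso_e, other, iso_f], 1e4, window_s, 10)
    print(window_s, "s exclusion ->", [(p.rt_min, p.mz) for p in picked])
```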

EDIT: Forgot this part --> In DIA and PRM you are constantly acquiring MS/MS spectra across your mass range in a cycle. So you can see the fragmentation patterns of two almost completely co-eluting phosphopeptides, and Thesaurus can help you identify them!
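
Why does seeing both fragmentation patterns help? Two positional isomers share a precursor mass, but some of their fragments ("site-determining ions") differ by the mass of the phosphate. Below is a generic Python sketch of that idea, the same concept localization tools build on. To be clear, this is NOT Thesaurus's algorithm, the peptide is hypothetical, and only singly charged b/y ions are considered. If a DIA spectrum contains the site-determining ions of both isomers, that's a hint both are there.

```python
# Monoisotopic residue masses (Da) for the residues used below
RESIDUE = {
    "G": 57.02146, "A": 71.03711, "S": 87.03203,
    "P": 97.05276, "E": 129.04259, "K": 128.09496,
}
PHOSPHO = 79.96633   # HPO3
PROTON = 1.007276
WATER = 18.010565


def fragment_ladder(peptide, phospho_site):
    """Singly charged b/y ion m/z values with a phosphate on residue phospho_site (0-based)."""
    masses = [RESIDUE[aa] for aa in peptide]
    masses[phospho_site] += PHOSPHO
    ions = {}
    for k in range(1, len(peptide)):
        ions[f"b{k}"] = sum(masses[:k]) + PROTON
        ions[f"y{k}"] = sum(masses[-k:]) + WATER + PROTON
    return ions


def site_determining_ions(peptide, site_a, site_b):
    """Fragments whose m/z differs between the two positional isomers."""
    a = fragment_ladder(peptide, site_a)
    b = fragment_ladder(peptide, site_b)
    return {name: (a[name], b[name]) for name in a if abs(a[name] - b[name]) > 1e-3}


def isomer_evidence(observed_mz, ions, tol=0.02):
    """Count how many site-determining ions of each isomer appear in a peak list."""
    def found(target):
        return any(abs(mz - target) <= tol for mz in observed_mz)
    hits_a = sum(found(a) for a, _ in ions.values())
    hits_b = sum(found(b) for _, b in ions.values())
    return hits_a, hits_b


# Hypothetical peptide with candidate sites on S3 and S5 (0-based positions 2 and 4)
ions = site_determining_ions("GASPSEK", 2, 4)
for name, (mz_a, mz_b) in sorted(ions.items()):
    print(f"{name}: isomer A {mz_a:.3f}  isomer B {mz_b:.3f}")

# A DIA-like peak list containing both sets of ions suggests both isomers are present
mixed = [mz for pair in ions.values() for mz in pair]
print(isomer_evidence(mixed, ions))
```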

I think DIA has kinda been floating around looking for something that it's good at -- or better at than dd-MS2 -- this might actually be that thing.

Thesaurus is shown working in conjunction with Skyline throughout the paper. It can also function as a complete stand-alone tool, and you can get it here.

(They got this wrong, btw....🙉🙉🙉🙉🙉🙉🙉!!!!!)

Wednesday, September 26, 2018

Splitting up TMT kits? Skip every other channel and increase your coverage!

We don't always have 11 samples to run. In cost per reaction, though (at least with my old sales rep -- the new one doesn't seem to appreciate the level of discount I expect 💔😇😇), TMT-11plex is about the cheapest way to go.

If you don't have 11 samples (for example, you have 6), you can get a boost in your number of peptide and protein IDs by skipping every other channel.

If I have 6 samples, I pick either the N or the C variant for each unit mass and stick to it. For example:

126

127 N

128 N

129 N

130 N

131 N

And make sure not to mix in any of the C variants from the same unit mass. To fully resolve 129N/C you need 43,225 resolution at m/z 200. This means you need to use 50,000 resolution on a Tribrid, QE HF, or QE HF-X -- or 70,000 resolution on a QE or QE Plus.

Sooooooo slooooow..... Great data...but...sloooooowww....
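
Where does that resolution requirement come from? The N and C variants of a unit mass differ only by swapping a 13C for a 15N, about 6.3 mDa. The Python sketch below estimates the resolving power at which the two reporter peaks are merely one FWHM apart, then converts that into the "resolution at m/z 200" setting the instrument asks for, assuming the usual approximation that Orbitrap resolving power scales with 1/sqrt(m/z). Treat its output as a floor: peaks one FWHM apart still overlap quite a bit, so cleanly separating them for quan needs a healthy margin on top, which is why the practical numbers above are higher.

```python
import math

C13_SHIFT = 1.0033548   # mass difference 13C - 12C (Da)
N15_SHIFT = 0.9970349   # mass difference 15N - 14N (Da)
REPORTER_MZ = 129.134   # approximate m/z of the 129N/129C reporter pair


def fwhm_resolution_needed(mz, delta_mz):
    """Resolving power (m/FWHM) at which two peaks delta_mz apart sit one FWHM apart."""
    return mz / delta_mz


def setting_at_200(r_at_mz, mz):
    """Convert a required resolution at mz into an Orbitrap 'resolution @ m/z 200' setting.

    Assumes Orbitrap resolving power scales as 1/sqrt(m/z).
    """
    return r_at_mz * math.sqrt(mz / 200.0)


delta = C13_SHIFT - N15_SHIFT
r_129 = fwhm_resolution_needed(REPORTER_MZ, delta)
r_200 = setting_at_200(r_129, REPORTER_MZ)
print(f"N/C spacing: {delta * 1000:.2f} mDa")
print(f"FWHM limit: ~{r_129:,.0f} at m/z 129, ~{r_200:,.0f} as an m/z 200 setting")
```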

If you use only one of the N/C variants for each unit mass, your reagent is essentially the TMT6 kit (with the N/C labels shuffled).

At 1 Da apart, it really doesn't matter what MS/MS resolution you use. I drop it all the way down (30 Hz on the Tribrid!). You aren't going to get 6 times the number of scans -- but unless you are fill-time limited (having trouble hitting that AGC target), you're going to get a lot more MS/MS spectra, peptides, and protein IDs than if you'd mixed your N/C labels!

Worth noting -- if you are using Proteome Discoverer and want PD to normalize the data, you probably want to create a new quan method containing only the channels you use.

If you aren't normalizing and/or imputing quan, you probably don't need to worry about it; you can just hide those channels in the output report. What you don't want is background noise in an unused channel's quan region (or a reporter M+1 isotope) being mistaken for real quan and then scaled up: if PD treats channel 128C as if you'd loaded only 1% of the peptide there, that noise gets amplified 100x. Probably harmless in the end, but it totally looks weird and worries me that it might actually affect something.
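
Here's a minimal Python sketch of why that happens. It uses plain total-intensity ("sum") normalization with hypothetical reporter intensities; PD's actual normalization options are more involved, so treat this as an illustration of the effect, not of PD's code. The empty 128C channel only holds noise at about 1% of the real channels, so it gets a ~100x scale factor, and its noise ends up looking like the biggest signal in the set.

```python
def sum_normalize(channels):
    """Scale each channel so its summed reporter intensity matches the largest channel total."""
    totals = {ch: sum(vals) for ch, vals in channels.items()}
    target = max(totals.values())
    factors = {ch: target / total for ch, total in totals.items()}
    normalized = {ch: [v * factors[ch] for v in vals] for ch, vals in channels.items()}
    return factors, normalized


# Hypothetical reporter intensities for three peptides
channels = {
    "126":  [4.0e5, 2.0e5, 1.0e5],
    "127N": [3.9e5, 2.1e5, 1.0e5],
    "128N": [4.1e5, 1.9e5, 1.0e5],
    "128C": [5.0e3, 1.5e3, 0.5e3],  # unused channel: only noise / isotope bleed-through
}

factors, normalized = sum_normalize(channels)
print("scale factors:", factors)
print("128C after normalization:", normalized["128C"])
```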

Tuesday, September 25, 2018

Pushing veterinary medicine forward with Proteomics!

I have a tendency to give people a really hard time for animal models. Or Arabidopsis. I understand these things have uses, I'm mostly just annoyed when people use these models unnecessarily -- or deceptively. Veterinary medicine is woefully behind a lot of other sciences. Maybe our technologies can do something about it?

Proteomics to the rescue!

Okay -- so some lucky person on this team got to collect urine from almost 20 sea lions. 11 of them were sick. What has to be more fun than collecting sea lion pee? Collecting it from one that doesn't feel well! Dedication to your craft, FTW!

Discovery-based LC-MS on a Tribrid found nearly 3,000 proteins, which almost made me try to find out how many proteins are normally found in urine. That sounds like a lot, right? It also turned up a number of obvious differential protein biomarkers. The authors mention in the paper that this bacterium affects a lot of other sea mammals, and they decided something with the word LION in its name was a better place to start than a cuddly otter.

While looking this group up, I also stumbled on something else ridiculously cool they're working on --- the CoMPaRE (excuse capitalization) program.

What can we learn from Comparative Proteomics across mammals? (I don't even know what half those animals even are!) I'm guessing a lot, and I bet that data mining is going to be a lot of fun!

Monday, September 24, 2018

Get even more control over your literature searches with Personal Blocklist!

Maybe everyone else knows about this, but I didn't.

Imagine that you are trying to research a new-to-you disease but you keep getting directed to dumb things you can't actually read. Annoying, right? What can you do about it?

You can install a personal blocklist! It's a Google add-in that puts a little "talk to the hand" thing in the top right corner of your browser.

Now go to one of those sites that takes up all the space in your search history -- and hit the hand button --

-- now decide if you ever want to go to that site again! Nope? Hit the blue thing!

Good-bye, forever WebMD!! 20 ads from something that is clearly copy/pasted from the Mayo Clinic web page...?....

Holy cow -- all you have to do is hit the "Sync" button and now both of these things are blocked on my iPad and my phone and everything but my work computer!!

Now -- try really hard not to go all trigger happy with all this power!!!

Good people still publish good science in Elfseverer....but that button is pretty tempting....

Sunday, September 23, 2018

Proteome-Wide Structural biology -- Where are we now?

(Figure from Rual et al., Nature 2005)

It probably is no surprise to you that using proteomics for structural biology is growing like crazy right now. It seems like every day another one of our 250 or so researchers sees some data from someone else's DSSO protein crosslinking study we knocked out and is excited to send us samples. At this point I think the queue for our only Fusion is longer than most reasonable estimates for my life expectancy and DSSO might be the main reason.

But protein crosslinking is just one of many structural biology techniques that are benefiting from our massive improvements in instrument speed, resolution, sensitivity -- and, more importantly (maybe?), our ability to alter our instruments' experiment logic (seriously -- maybe above all else, the reason the Fusion is so powerful -- though MaxQuant Live may offset that somewhat when it launches).

Where does our field stand in 2018? This great update in this month's JPR has all the answers!

It goes into techniques you've probably heard of and maybe forgot about -- ones that sounded smart years ago, but that the hardware just couldn't pull off. Maybe it's time to revisit these with what we can do today! (Honestly, it has a bunch I swear I've never heard of at all -- and those are cool too!!)

Friday, September 21, 2018

The modern guide to proteomic statistics!

Okay, on the surface this probably isn't the most exciting topic in the world, but this review is perhaps the best recent example I've seen on the topic of false discovery rates in proteomics.

I'm permanently linking this over there ---> somewhere in the (probably needs updating anyway) section for people new to proteomics ideas.

Next time you're having the FDR conversation with a customer or collaborator -- oh, you have one today? me too! -- maybe think about starting with this amazingly insightful and well-written tutorial.
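
If the FDR conversation needs something concrete, here's a minimal Python sketch of the classic target-decoy approach: rank PSMs by score, estimate FDR at each threshold as decoys/targets, and turn that into q-values. This is just the simplest textbook estimator on hypothetical scores; there are variations (for example, adding 1 to the decoy count), and it isn't necessarily what the review recommends.

```python
def q_values(psms):
    """Target-decoy q-values for (score, is_decoy) pairs, higher score = better.

    FDR at each score threshold is estimated as (#decoys / #targets) at or above it;
    a PSM's q-value is the lowest FDR of any threshold that still accepts it.
    """
    ranked = sorted(psms, key=lambda p: p[0], reverse=True)
    fdrs, targets, decoys = [], 0, 0
    for _, is_decoy in ranked:
        if is_decoy:
            decoys += 1
        else:
            targets += 1
        fdrs.append(decoys / max(targets, 1))
    # Walk from the worst score upward, keeping the running minimum FDR
    qs, running_min = [0.0] * len(fdrs), float("inf")
    for i in range(len(fdrs) - 1, -1, -1):
        running_min = min(running_min, fdrs[i])
        qs[i] = running_min
    return [(score, is_decoy, q) for (score, is_decoy), q in zip(ranked, qs)]


def accepted_targets(psms, fdr=0.01):
    """Scores of target PSMs passing the FDR cutoff."""
    return [score for score, is_decoy, q in q_values(psms) if not is_decoy and q <= fdr]


# Hypothetical search results: (score, is_decoy)
psms = [(10, False), (9, False), (8, False), (7, True), (6, False),
        (5, False), (4, True), (3, False), (2, True), (1, True)]
print(accepted_targets(psms, fdr=0.01))
print(accepted_targets(psms, fdr=0.20))
```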

Definitely on this topic -- this xkcd I'd never seen till today. Shoutout to Ben Neely for the link!

Somewhat related and something that will go in that section over there --> as well -- if you do the Twitter thing -- @BioTweeps posted a really concise and well-written overview of Mass Spectrometry that fits surprisingly well within the Twitter character limit. You can find it here.

Thursday, September 20, 2018

Need some inspiration? REAL CLINICAL PROTEOMICS. This is what we can do today!!

Okay -- cool developments with our toys aside for a second -- this might be one of my all-time favorite papers. If you can look at that picture without getting inspired by how far proteomics has come -- AND WHAT WE MIGHT DO NEXT?? you probably didn't click the right link to end up here.

I think I might have passed by this paper once because I didn't know what a lot of the words were in that title.

To be honest -- I have one criticism of this paper. The title is terrible.

What I would have made the title --

In a clinically relevant time frame we can help diagnose a cancer patient and pick a personalized therapy to kill their tumor and massively increase the chance the patient survives!!

How'd they do it? They did label-free proteomics on slides (? pretty sure?) from an excised tumor as well as the surrounding stromal tissue.

They rapid tip digest it (they use the rapid combined reduction/alkylation method as well) and they pop 1 µg of peptides onto an in-house 40 cm column (probably cost them $5 to make?) on a Q Exactive HF. Yup, just an HF. Like the one in your lab right now, running brain digests from mice with generalized anxiety disorder or something, that you were considering trading in for something more expensive to run mouse brain digests on? Same one!

They use the always free MaxQuant and Perseus for the data processing and downstream analysis/stats.

Why am I fixating on prices? Because the first argument in clinical anything (at least in the US) is "how can we make an absurd profit for our shareholders again this year if the test we charge $8000 for costs more than $1.16 to actually run? Do you think we're running a charity in this hospital?!?!?"

This group just showed us that we could do personalized proteomics to help patients TODAY with an aging benchtop Orbitrap (that will fit neatly into any clinical lab -- have you seen how much smaller colorimetric blood analyzers are now? They're tiny! Boom -- put an HF there). Factor in free software and virtually no reagent cost (10 µL of acetonitrile and some trypsin), and I don't think we're too far off that $1.16 target for the assay cost. We can stop talking about personalized medicine and actually start doing it already!

Monday, September 17, 2018

Ultra-high pressure column loading?

I'm torn on this one, cause this is real footage of me the first, last and only time I ever packed a nanoLC column...

...you know what would make this even better? Throwing in an extra 25,000 PSI? Ummmm.... no...not the best idea for me....

However -- if you have successfully packed a nanoLC column without injuring yourself or others AND can make one that doesn't negatively impact the performance of that $1M instrument you're using...maybe this is a solution that would work for you!

You can find the paper at ACS here!

The MSM blog has also posted a summary of the paper here!

Me...I'll probably keep buying nanoLC columns from vendors who are responsible for doing it safely and correctly and are responsible for the upfront QC on their products....

Saturday, September 15, 2018

Miss the days when PD was slower and less stable? Tips to relive those days!

This has come up a lot over the years and I was surprised to see I couldn't find a case of me rambling about it here! So....I present Ben's guide to run Proteome Discoverer way slower and with lots of weird random chaotic surprises!

Tip 1 (picture above):

Keep all your stuff on separate drives while processing! Bonus points if you keep your RAW files on network drives. Double bonus points if you process your RAW files over your network from one drive and then deposit the results on a different processing drive!! Want to level all the way up? Pull your RAW files from one network storage drive. THEN transmit your processed results to a DIFFERENT network storage drive!

...hours of processing....

Besides the fact that you've gone from eSATA data transfer rates (6 Gbps, according to Google) to (assuming you have true gigabit Ethernet LAN) a whopping 100 Mbps, which is a minimum of 60x slower, you also get to deal with a bunch of cool extra things that are described well on this page.

It totally cracks me up that the physical distance between your network drive and your PC is a tangible factor that can affect your network rate. High traffic on your network doesn't speed things up either (a win for us nocturnal scientists!), but that is often negated by the huge FAIL of drives doing things like running their backups and security scans at 2am, when there is only the one weird guy in the building using them.

Honestly, our files aren't all that big. We just did some deep fractionated proteomes (15 fractions) and they're maybe 24GB per patient. The difference between 6 Gbps and 100 Mbps shouldn't be that big of a deal, even if you had 10 of them, right? However, it isn't just one read step. It's constant R/W steps (have you seen the funny huge ".scratch" file that is generated while you're running?), and you are constantly reading that back and forth across the network.
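
A quick back-of-the-envelope in Python for why the repeated R/W passes are what hurts. It uses ideal link rates and ignores protocol overhead, latency, and contention (all of which make the network side worse), and the 20 passes over the data is a made-up number for illustration, not something I measured PD doing.

```python
def transfer_seconds(size_gb, link_gbps):
    """Idealized time to move size_gb gigabytes over a link of link_gbps gigabits/second."""
    return size_gb * 8 / link_gbps


SIZE_GB = 24  # one deeply fractionated patient, per the post
links = {"eSATA (6 Gbps)": 6.0, "network (100 Mbps)": 0.1}

for label, gbps in links.items():
    seconds = transfer_seconds(SIZE_GB, gbps)
    print(f"{label}: {seconds / 60:.1f} min per full pass over the data")

# Hypothetical: PD touching the equivalent of the whole data set 20 times
PASSES = 20
slow = PASSES * transfer_seconds(SIZE_GB, 0.1) / 3600
fast = PASSES * transfer_seconds(SIZE_GB, 6.0) / 3600
print(f"{PASSES} passes: {fast:.2f} h local vs {slow:.1f} h over the network")
```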

On top of the fact that PD is super slow, you get all sorts of hilarious strange bugs. This week I saw one where PD claimed there was something wrong with the name of the output file someone was trying to use! Wins all around!

Tip 2: Even on the same PC --- process your data on different drives!

I think I have proof around here somewhere. I think I worked it out to 24x slower if you R/W across different drives as opposed to processing the same data all on one drive. I think it's on my old PC....I'll update if I find it, it's striking.

Wait -- side note --- did you know that even HDDs can have markedly different speeds? They totally do! There are drives designed for storage that are much slower than ones meant for working on. I've described my problem with that recently on here I think.

This is from a paper currently in review from our lab, but I think it's cool to use it here out of context ---

The cool part is how our new software makes processing huge proteomics sets much faster while kicking out the same data -- but what is pertinent to this ramble is the two shorter bars. Using the exact same files, huge multi-gig proteogenomic FASTA, and software settings, we can drop a processing run from 24 hours down to 14 or so just by moving everything from an HDD to a faster standard commercial Solid State Drive (SSD). If you aren't processing on these, I'd recommend checking them out again. They are getting cheaper every day. I think we just ordered some 1TB ones for less than $200. Bonus: I've still never had an SSD fail. And I've got 2 HDDs on different boxes that sound like they are popping popcorn (not the best sign ever) that aren't as old as the SSDs sharing space with them.

Can I call this a "guide" if there are only two tips? On the first Saturday in approximately 3 years in Maryland where the sun is shining? Looks like I sure can. I need to put on some brake pads.

TL/DR: PD HATES processing over network drives. Move your data and output files to the same drive when running PD then put them back. Yeah, transferring is a pain, but you'll more than make up for it in processing your data faster and with less random chaos.

Big shoutout to the two great scientists who introduced me to new PD errors this week that inspired this post! I promise I'm not making fun. This really does come up a lot. It's too tempting to use your >100TB network storage rather than move things around, but I think system architecture needs to be improved before you can do it bug-free.

Friday, September 14, 2018

ANN-Solo -- Use spectral libraries to search for modified spectra?

Another big thanks to @PastelBio for something I would have missed!

Okay -- so what if you took one of the remaining limitations of spectral libraries and threw it out the window? I'm talking about the fact that your library must contain the modification that you're looking for(!?!) -- Then you'd have ANN-Solo! You can read about it at JPR here. An earlier version of the text was released on bioRxiv as well.

Now...I'm unclear how the ANN (Approximate Nearest Neighbor) part of this differs from the NIST Open Search functionality added to MSPepSearch last year. At first it seems interesting that the authors use the NIST library here but don't appear to compare their code to MSPepSearch + HybridSearch. They do use other libraries and since MSPepSearch only utilizes NIST library format, maybe the comparison isn't possible? I would be very interested in seeing a comparison between the two.

Unfortunately, while Open Search has an .exe that I can just run, ANN-Solo requires Python (with NumPy) to work, and I'll have to ask for help if I want to try it. Honestly, with results as good as the paper reports -- 100% worth it.

Interested and don't feel like reading on a Friday? You can get the software here!
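
For the "how could a library find a spectrum it doesn't contain?" question, here's a toy open-search sketch in Python: bin spectra into vectors, score with cosine similarity, and accept library candidates across a wide precursor window, so the precursor mass difference hints at the unknown modification. Big caveats: this is exact brute-force search, not an approximate nearest neighbor index, it is not ANN-Solo's code or scoring, and a plain cosine only credits fragments that didn't shift (handling the shifted ones is part of what a dedicated tool does better). The library entries and peaks are hypothetical.

```python
import math
from collections import defaultdict


def binned_vector(peaks, bin_width=1.0005):
    """Sparse, L2-normalized vector of sqrt(intensity) values binned by fragment m/z."""
    vec = defaultdict(float)
    for mz, intensity in peaks:
        vec[int(mz / bin_width)] += math.sqrt(intensity)
    norm = math.sqrt(sum(v * v for v in vec.values()))
    return {k: v / norm for k, v in vec.items()} if norm else {}


def cosine(a, b):
    """Dot product of two normalized sparse vectors."""
    return sum(v * b.get(k, 0.0) for k, v in a.items())


def open_search(query, library, window_da=500.0):
    """Best library match within a wide ('open') precursor mass window.

    Brute force: every library entry in the window is scored. Returns
    (score, library name, precursor mass difference) or None.
    """
    q_vec = binned_vector(query["peaks"])
    best = None
    for entry in library:
        delta = query["precursor_mass"] - entry["precursor_mass"]
        if abs(delta) > window_da:
            continue
        score = cosine(q_vec, binned_vector(entry["peaks"]))
        if best is None or score > best[0]:
            best = (score, entry["name"], delta)
    return best


# Hypothetical library, plus a query carrying an unknown +79.966 Da modification
library = [
    {"name": "LIB_A", "precursor_mass": 1000.0,
     "peaks": [(200.1, 100), (300.2, 50), (400.3, 80), (500.4, 30)]},
    {"name": "LIB_B", "precursor_mass": 1050.0,
     "peaks": [(210.1, 90), (330.2, 60), (450.3, 20), (520.4, 70)]},
]
query = {"precursor_mass": 1079.966,
         "peaks": [(200.1, 100), (300.2, 50), (479.3, 80), (579.4, 30)]}

score, name, delta = open_search(query, library)
print(f"best match {name}, cosine {score:.2f}, mass shift {delta:+.3f} Da")
```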

Thursday, September 13, 2018

Mislabeled Data Challenge starts September 24th!

Yo! Bioinformatics peeps! Want in on the coolest challenge you've ever heard of?

Check this out!

Can you get clinical data and proteomic data (and RNA-Seq data, but who cares about that?) from "patient samples" that have been deliberately mixed up and blinded and then sort them back out?

I'm not 100% sure our group qualifies to compete, but we entered anyway. Fingers crossed -- we REALLY want in on this after-work/weekend project.

I would like to unofficially increase the level of this challenge. Forget the RNA-Seq data. We can do this with proteomics alone!

If we get in we aren't even gonna download the RNA-Seq. The signal is there in the proteomics data. We just need smarter ways to pull it out for comparison.

Time to shift the paradigm! It's FINALLY the age of the proteome and this is a test case where we can prove it.

You can read more about this challenge in Nature Medicine here.

You can directly sign up here.

Be warned, I'm already planning an award ceremony for when someone pulls this off without looking at the nucleotide data.

My proposal -- we should have an award ceremony for ourselves at ASMS or HUPO next year. I also propose it features the great Dr. Jurgen Cox coming in and kicking over a stool that has a MiSeq on it. Come on, tell me you had trouble visualizing that happening when you read it!

Edit:

{Edited to remove some statements regarding RNA-Seq and shoes}

Wednesday, September 12, 2018

Rapid assessment of non-protein contaminants with Skyline!

Hopefully if you're doing proteomics you're always throwing in some great FASTA entries from cRAP or the MaxQuant contaminant database or have even generated your own list of stuff that you find in every water blank (or a combination of all 3).

Have you ever seen a way to keep track of the junk in your sample that isn't from sheep wool or gorilla keratin peptides?

Me either! Here you go!

Loads of reasons to read this paper.

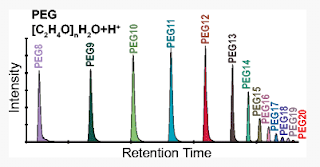

1) PEG is in just about every sample in some way. It's only when there is tons of it that it's a serious problem. This can help you keep track of this!

2) PEG is the first thing you might think of, but there are other contaminants as well. And this method doesn't just work for proteomics. It'll work for any LC-MS experiment.

3) The author totally pulls off a full (and awesome) application note as the single author. It's a great precedent for people with a bunch of stuff on their desktop that they felt funny about writing alone. Writing "I" a lot in a paper feels really weird while you're doing it, just because you're so used to reading "we". It doesn't come off as weird when you read someone else who wrote it that way.

4) In Excel you can =MROUND([Cell],5) to round to the nearest 5. Which no person ever in the history of the world has ever needed. You're welcome.

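
If you want a head start on tracking PEG as small-molecule targets, here's a Python sketch that generates the PEG ladder (repeat unit C2H4O, about 44.026 Da apart) as singly charged [M+H]+, [M+NH4]+ and [M+Na]+ m/z values. This is my own sketch, not the method from the paper. It only covers linear hydroxyl-terminated PEG, and you'd still need to arrange the values into whatever transition list format your Skyline version expects.

```python
# Monoisotopic masses (Da)
H = 1.00782503
C = 12.0
N = 14.00307401
O = 15.99491462
NA = 22.98976928
PROTON = 1.00727646
ELECTRON = 0.00054858

EO_UNIT = 2 * C + 4 * H + O   # C2H4O repeat unit of PEG, ~44.026
WATER = 2 * H + O             # end groups of HO-(C2H4O)n-H


def peg_ladder(n_min=4, n_max=15):
    """Singly charged m/z values for linear PEG oligomers HO-(C2H4O)n-H."""
    rows = []
    for n in range(n_min, n_max + 1):
        neutral = n * EO_UNIT + WATER
        rows.append({
            "n": n,
            "[M+H]+": neutral + PROTON,
            "[M+NH4]+": neutral + N + 3 * H + PROTON,
            "[M+Na]+": neutral + NA - ELECTRON,
        })
    return rows


for row in peg_ladder(6, 10):
    print(f"n={row['n']:2d}  [M+H]+ {row['[M+H]+']:.4f}  "
          f"[M+NH4]+ {row['[M+NH4]+']:.4f}  [M+Na]+ {row['[M+Na]+']:.4f}")
```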

Tuesday, September 11, 2018

Probing the sensitivity of the Fusion Lumos system!

This new paper at JPR sets up a terrific standard method for determining the sensitivity of an LC-MS system.

The NIST reference antibody was digested and spiked at different levels into a constant amount of a standard yeast digest. The Lumos was operated in different ways to determine relative sensitivity by picking up the mAb digest at different spike levels.

The most interesting comparisons are probably when the ion trap and Orbitrap are compared and when the Lumos is compared head-to-head with a Q Exactive Plus instrument.

While the Lumos comes out ahead in every comparison, it's only when the ion trap is involved that the gap between the two instruments becomes too large to overcome with some optimization and a longer gradient.

There are a lot of gems in this study to help guide instrument selection and method optimization on this great platform.

Monday, September 10, 2018

Time to justify that Virtual Reality gaming rig you've been thinking about!

Sometimes we need to ride some coattails to move science forward. Case in point?

First of all -- there is an entire journal called "Journal of Computer-Aided Molecular Design"!?!?

Second --- you might also be mostly aware that VR headsets are out there from videos of how stupid people look while playing games with them....

Okay -- but what if you could take one of these things and, with shockingly little code (freely available here), use it to immerse yourself in protein structures from the immense PDB database that all those weird structural people are already uploading to?

The better the PDB structure (newer ones tend to have way more snapshots of the protein from different angles), the better this all works, but if you can't seem to sort out those protein interactions, maybe a visual/pseudo-kinesthetic approach will help you get that breakthrough!
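
Curious what "shockingly little code" starts with? Any viewer, VR or not, begins by pulling atom coordinates out of the structure file. Here's a minimal Python sketch (not the code from the paper) that parses ATOM/HETATM records from legacy PDB-format text using the fixed column layout and centers the structure at the origin, roughly what you'd do before dropping it into a scene. It assumes legacy PDB format; very large structures are distributed only as PDBx/mmCIF, which this doesn't handle. The two sample records are hypothetical.

```python
def read_atoms(pdb_text):
    """Pull atom names, residues, and x/y/z coordinates out of legacy PDB-format text.

    Uses the fixed-column ATOM/HETATM layout (x, y, z in columns 31-38, 39-46, 47-54).
    """
    atoms = []
    for line in pdb_text.splitlines():
        if line.startswith(("ATOM  ", "HETATM")):
            atoms.append({
                "name": line[12:16].strip(),
                "residue": line[17:20].strip(),
                "chain": line[21],
                "resseq": int(line[22:26]),
                "xyz": (float(line[30:38]), float(line[38:46]), float(line[46:54])),
            })
    return atoms


def centered_coordinates(atoms):
    """Shift coordinates so the structure's centroid sits at the origin (e.g. of a VR scene)."""
    n = len(atoms)
    cx, cy, cz = (sum(a["xyz"][i] for a in atoms) / n for i in range(3))
    return [(x - cx, y - cy, z - cz) for x, y, z in (a["xyz"] for a in atoms)]


# Two hypothetical ATOM records, laid out with the fixed PDB columns
sample = "\n".join(
    f"ATOM  {i:5d} {'CA':<4s} {'GLY':>3s} A{i:4d}    {x:8.3f}{y:8.3f}{z:8.3f}  1.00  0.00"
    for i, (x, y, z) in enumerate([(11.0, 5.0, 5.0), (9.0, 5.0, 5.0)], start=1)
)
atoms = read_atoms(sample)
print(centered_coordinates(atoms))
```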

Wednesday, September 5, 2018

Deep diving in spinal fluid proteomics!

Human body fluids have unbelievable dynamic ranges in terms of protein abundance. Spinal fluid is no different. Which is surprising, honestly, if you look at it, because it just looks like water.

(Not my freakishly large hands).

Just like the blood/serum/plasma proteome, we don't know with 100% certainty what proteins are present in the fluid under normal conditions, but this brand new JPR study does the best job yet of thorough characterization.

Cool stuff from this study -- there are companies you can just buy commercial human body fluids from! They just bought a bunch of CSF!

They digest the CSF, TMT6-plex and then they break out the OFF-GEL and use the high resolution fractionator (24 isoelectric peptide fractions).

It looks like they take the peptides directly from the OFF-GEL and desalt online (! awesome if true !) and run a complex 171.354 minute gradient (my math) on a 50 cm column into an Orbitrap Fusion Lumos running in OT-OT mode (120k MS1, 15k MS/MS).

That's 68 hours of Lumos time and the highest number of peptides and proteins from CSF to-date, by a large margin! Now that there is an improved baseline for "normal" is it time to re-evaluate some of these historic datasets from studies on different pathologies? I'd think so!
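
For the record, the arithmetic behind that instrument-time figure, as a tiny Python check. It ignores any loading or column re-equilibration time between fractions, which would only add to it.

```python
FRACTIONS = 24            # OFF-GEL high-resolution fractions
GRADIENT_MIN = 171.354    # per-fraction run length, from the estimate above

total_min = FRACTIONS * GRADIENT_MIN
total_h = total_min / 60
print(f"{FRACTIONS} x {GRADIENT_MIN} min = {total_min:,.1f} min = {total_h:.1f} h")
```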

All RAW files from this great new reference dataset are available at PRIDE/ProteomeXchange here.

Tuesday, September 4, 2018

Deep Learning in Multi-Omics!

If Google can track the flu progression across a country (incorrectly) with deep learning AI approach stuff, surely the incorrect interpretation of hidden mass patterns can't be that much harder, right?

This cool open access review takes a look at what challenges we currently face, and what tools are already out there!

Monday, September 3, 2018

Orchestrating the proteome!

This is a beautiful little open access review on the proteome PTM-scape with awesomely pertinent references.

100% recommended, particularly when that "why don't we just do transcriptomics" discussion comes up!
