Thursday, January 29, 2015

Download links are going to be temporarily down

All of the links on the right side of the screen, including the Orbitrap Methods database and apps, will need to go down temporarily. Sorry if this is inconvenient. I hope to have new links up by the weekend.

PROMIS-Quan! 3,000 plasma proteins quantified in a single run?!?!

I stole that pic cause it sums it up. PROMIS-Quan is short for PROteomics of MIcroparticles with Super-SILAC Quantification. And if that doesn't suggest the difficulty of setting up this study, nothing will.

The plasma proteomics is broken up into two steps: the normal stuff, and then plasma microparticles (which are really freaking cool in their own right...just ridiculously low abundance).

If I have this all straight in my head, this is the general idea. The super-SILAC mix is going to have representative proteins from just about every protein/peptide humans can produce. We can put in as much of that stuff as we want, including at levels that will be easily fragmented and identified. To get around the insane dynamic range of proteins in plasma, we don't actually try to fragment the same peptides/proteins from the plasma itself. We know they are there because of the SILAC pairing effect: the heavy standard gets fragmented and identified, and the light plasma peptide shows up right next to it in the MS1.

Nice, right? Some people set their instruments so that when they do SILAC they only fragment either the heavy or the light member of each pair anyway. Since the SILAC pairs are clear as day in high res, you don't need to waste time fragmenting both the heavy and the light. This study exploits this fact to give us an unprecedented level of plasma peptide coverage.
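To make the pairing idea concrete, here is a minimal Python sketch of how a heavy/light partner can be spotted in a high-res MS1 peak list without any MS/MS. It assumes the common Lys8/Arg10 labels (13C6 15N2 lysine, +8.014199 Da; 13C6 15N4 arginine, +10.008269 Da), one labeled residue per peptide, and a 5 ppm tolerance. The peak list and function name are hypothetical, and this is not the PROMIS-Quan software.

```python
# Hypothetical sketch: find light/heavy SILAC partners in an MS1 peak list.
# Assumes one labeled residue per peptide (e.g., a tryptic peptide ending in K or R).
HEAVY_SHIFTS_DA = {
    "Lys8": 8.014199,    # 13C6 15N2 lysine (assumed label)
    "Arg10": 10.008269,  # 13C6 15N4 arginine (assumed label)
}

def find_silac_pairs(peaks, tol_ppm=5.0):
    """Return (light_mz, heavy_mz, charge, label) for every matching pair.

    peaks: list of (mz, charge) MS1 features. A heavy partner must have the
    same charge and sit shift/charge m/z units above the light peak.
    """
    pairs = []
    for light_mz, z in peaks:
        for label, shift in HEAVY_SHIFTS_DA.items():
            expected_heavy = light_mz + shift / z
            for heavy_mz, z_heavy in peaks:
                if z_heavy != z:
                    continue
                error_ppm = abs(heavy_mz - expected_heavy) / expected_heavy * 1e6
                if error_ppm <= tol_ppm:
                    pairs.append((light_mz, heavy_mz, z, label))
    return pairs

peaks = [(500.0, 2), (504.0071, 2), (600.0, 3)]
print(find_silac_pairs(peaks))  # [(500.0, 504.0071, 2, 'Lys8')]
```

The nested loops are fine for a toy list; a real tool would sort peaks by m/z and use a binary search for each expected partner.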

Now, if I could find one fault with this study, it is this: it would be hard for me to replicate these results myself because my super-SILAC mix would be different from the one they used. Now, if some enterprising soul would make up, I don't know, 10,000 L of SILAC cells so we could all use the same supermix for the next 5 years or something, we'd be in business.

If you are doing plasma proteomics, thinking about it, or did it for years and got nothing out of it, I strongly suggest you check this paper out.

Wednesday, January 28, 2015

Another try at combining results from multiple iTRAQ experiments

Throughout the history of this blog, one thing is almost always guaranteed to get me some reader feedback: getting really excited about a way to combine results from multiple reporter ion studies.

When I was at the NIH we would do this experiment: tissue or plasma from 4 patients with some form of severe malaria, iTRAQ labeled (now TMT 10plex) and mixed with similarly labeled peptides from patients with less severe malaria symptoms. The awesome scientist who took over my position when I left has continued a lot of this work. The end result? Hundreds, maybe thousands, of files from studies that are interesting in their own right, but can't be linked together to form a bigger picture. There are LOTS of labs in this position. Hence the interest in being able to link multiple iTRAQ/TMT studies together.

Problem is, this stuff is complicated. I've seen talks where people always use one channel for the same control sample, or spike in standards, but it seems like a good statistician can always punch holes in the observations that come out -- even if the results look good. We are juggling lots of variables, and to link these things you need some high-end statistics that, quite honestly, I wouldn't understand anyway.
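For readers who haven't seen the shared-control trick, here is a minimal Python sketch of it: every run carries the same reference sample in one reporter channel, and each protein's other channels are expressed as log2 ratios to that channel so numbers from different runs land on a common scale. The channel names, intensities and function name are hypothetical. This is exactly the kind of simple normalization a statistician can poke holes in (it ignores ratio compression and run-to-run variance, for example).

```python
import math

def ratios_to_reference(run, ref_channel):
    """run: {protein: {channel: reporter_intensity}} for one iTRAQ/TMT experiment.

    Returns {protein: {channel: log2(intensity / reference intensity)}}, with the
    reference channel itself dropped. Proteins missing the reference are skipped.
    """
    out = {}
    for protein, channels in run.items():
        ref = channels.get(ref_channel)
        if not ref:
            continue  # no usable reference signal for this protein in this run
        out[protein] = {
            ch: math.log2(value / ref)
            for ch, value in channels.items()
            if ch != ref_channel
        }
    return out

# Two hypothetical runs that share the same control sample in channel "113".
run1 = {"P1": {"113": 100.0, "114": 200.0}}
run2 = {"P1": {"113": 50.0, "117": 25.0}, "P2": {"114": 10.0}}

combined = {}
for run_name, run in (("run1", run1), ("run2", run2)):
    for protein, ratios in ratios_to_reference(run, "113").items():
        for ch, value in ratios.items():
            # Key by run and channel so channels reused across runs don't collide.
            combined.setdefault(protein, {})[f"{run_name}:{ch}"] = value
print(combined)  # {'P1': {'run1:114': 1.0, 'run2:117': -1.0}}
```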

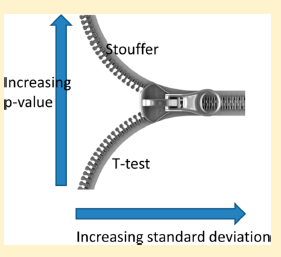

So, here we go again! This paper in this month's JPR from D. Pascovici et al. describes the use of the Stouffer z-score to combine results from multiple iTRAQ studies. The algorithm appears to be derived from robust statistics previously used in meta-analysis of microarray data. The results look impressive, and the algorithm is applied to two separate biological systems, one in plants and one in a rodent brain system. The plant system is really cool cause they actually get a visible phenotype from the plant.
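Stouffer's method itself is short enough to write down: convert each experiment's evidence for a protein into a z-score z_i, then combine them as Z = sum(w_i * z_i) / sqrt(sum(w_i^2)), which is again standard normal under the null if the experiments are independent. The Python sketch below shows only that combination step. How Pascovici et al. derive the per-experiment z-scores and choose weights is not reproduced here; the equal weights and the example numbers are assumptions.

```python
import math
from statistics import NormalDist  # Python 3.8+

def stouffer_z(z_scores, weights=None):
    """Combine independent z-scores: sum(w_i * z_i) / sqrt(sum(w_i ** 2))."""
    if weights is None:
        weights = [1.0] * len(z_scores)  # unweighted Stouffer (assumption)
    if len(weights) != len(z_scores):
        raise ValueError("need one weight per z-score")
    numerator = sum(w * z for w, z in zip(weights, z_scores))
    denominator = math.sqrt(sum(w * w for w in weights))
    return numerator / denominator

def two_sided_p(z):
    """Two-sided p-value for a standard normal test statistic."""
    return 2.0 * (1.0 - NormalDist().cdf(abs(z)))

# Hypothetical z-scores for one protein in three separate iTRAQ experiments.
combined = stouffer_z([1.2, 1.5, 1.1])
print(round(combined, 3), round(two_sided_p(combined), 4))
```

In this toy case none of the three experiments clears |z| = 1.96 on its own, but the combined score does, which is the appeal of linking many smaller studies.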

Yes, I'm in over my head. There are lots of math symbols in this paper that I do not recognize, and that hinders my ability to be critical here. But that's for people with different training. In the end, if you show me (and some reviewers who are obviously more qualified) that your system gives you results that you can match to a phenotype, I'm gonna want to try it out. And I'm gonna tell lots of people about it.

I'd love to hear what you guys think of this!

Monday, January 26, 2015

Do you think your label free mass spec quan algorithm is the best? Want to prove it??!?!

Every few months I tell myself I need to pay better attention to what those smart people in ABRF are doing. Unfortunately, it tends to slip down my

Case in point: This awesome analysis ABRF is doing right now! The study is called "Differential Abundance Analysis in Label Free Quantitative Proteomics". I should probably stop to read the whole thing but I won't have time until

If you are interested you'll probably want to download these now, cause we know how long an analysis of this type tends to take!

Shout out to Brett Phinney for sending me an email tipping me off to this pending deadline!

Sunday, January 25, 2015

Monday, January 19, 2015

The effects of different buffers on deamidation in tryptic digestion

Deamidation is a polarizing issue. Some people throw it into their searches, other people don't. Dave Muddiman has a paper out there showing that, a lot of the time, what appears to be deamidation may simply be a search artifact. But there is also a paper out there, which I won't direct you to, that uses 1 Da shifts on instruments without accurate mass capabilities to determine the presence or absence of glycosylation sites, and it went into a really nice journal.
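The reason 1 Da shifts on a low-accuracy instrument are risky is simple arithmetic: deamidation (N to D or Q to E) adds 0.984016 Da, while the 13C isotope peak of the unmodified peptide sits 1.003355 Da above its monoisotopic peak. The Python sketch below expresses that roughly 0.0193 Da gap in ppm of the peptide mass. The peptide masses and tolerances in the example are illustrative assumptions.

```python
DEAMIDATION_DA = 0.984016  # monoisotopic mass shift for N->D or Q->E
C13_SPACING_DA = 1.003355  # 13C minus 12C mass difference

def deamidation_vs_c13_ppm(neutral_mass_da):
    """Gap between a deamidated peptide and the unmodified peptide's 13C peak,
    in ppm of the peptide mass (the same ppm applies at any charge state)."""
    return (C13_SPACING_DA - DEAMIDATION_DA) / neutral_mass_da * 1e6

def can_tell_apart(neutral_mass_da, mass_tolerance_ppm):
    """Rough check: the gap must exceed the tolerance used to match peaks."""
    return deamidation_vs_c13_ppm(neutral_mass_da) > mass_tolerance_ppm

for mass in (1000.0, 2000.0, 3000.0):
    print(mass, round(deamidation_vs_c13_ppm(mass), 1), "ppm")
print(can_tell_apart(2000.0, 5.0))    # True: ~9.7 ppm gap vs a 5 ppm tolerance
print(can_tell_apart(2000.0, 250.0))  # False: a ~0.5 Da window at 2000 Da
```

Actually separating the two peaks also takes enough resolving power, which is a separate question from mass accuracy.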

Piliang Hao et al. took a really close look at deamidation of peptides treated with a number of different buffer conditions. To keep things simple, they start with a synthetic peptide, then go up orders of magnitude in complexity and check out some iTRAQ labeled peptides from rat kidneys.

For the MS/MS analysis, they use a Q Exactive. The data processing was performed with custom Perl scripts, MaxQuant, and I think they used Mascot as well. In case you were wondering, deamidation was searched as a dynamic modification.

What did they find out? It's summed up pretty well in the chart above. I feel like I had a rant recently about how we need to start standardizing our sample prep methods, but it seems hazy now...

This is a nice short study that you can easily finish over a cup of coffee.

Sunday, January 18, 2015

Whoa! Have you seen the cool MCP cover?!?!?

Now, I don't have access (yet...library is closed...) to this full-length article, but it's cool enough that I'm going to direct you to it anyway.

In the study from Pernille Foged Jensen et al., they work out some of the protein-protein interactions of intact IgG and the neonatal Fc receptor by using hydrogen-deuterium exchange! Seriously. We are at a point in this field where we can see single neutron shifts in protein complexes!?!?!

They go even further and map out these interactions by using ETD to determine where the deuterium is incorporated. I seriously can't wait to read this.

You can find the abstract (and, if you're lucky enough to belong to someplace that subscribes to MCP, the full text) here.

Update: 1/19/15. Got the paper. My interpretation of the figure in the abstract is definitely off a bit. They did not rely on single Da shifts in these complexes. They use measurement of relative deuterium uptake as one measurement and use intact MS1 as a separate series of measurements. It is a very nice paper combining a great set of techniques, but I think that is an important distinction here.
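For anyone new to HDX, the relative uptake measurement works roughly like this: each backbone amide hydrogen swapped for deuterium adds about 1.006 Da, so you compare the intensity-weighted centroid of a peptide's isotope envelope after labeling against the undeuterated control, then scale by a maximum (for example, a fully deuterated control). The Python sketch below uses idealized, made-up envelopes and hypothetical function names; it is not the processing software Jensen et al. used.

```python
def centroid_times_charge(mz_values, intensities, charge):
    """Intensity-weighted mean m/z multiplied by charge.

    This still includes charge * proton mass, but that term cancels when two
    envelopes of the same charge state are subtracted.
    """
    total = sum(intensities)
    mean_mz = sum(m * i for m, i in zip(mz_values, intensities)) / total
    return mean_mz * charge

def deuterium_uptake(undeuterated, deuterated, charge):
    """Mass increase (Da) of the envelope centroid after D2O labeling.

    Each envelope is a (mz_values, intensities) pair.
    """
    return (centroid_times_charge(*deuterated, charge)
            - centroid_times_charge(*undeuterated, charge))

def relative_uptake_percent(uptake_da, max_uptake_da):
    """Uptake as a percentage of a maximum, e.g. a fully deuterated control."""
    return 100.0 * uptake_da / max_uptake_da

# Made-up 2+ envelopes: the labeled one is shifted by 0.5 m/z (1 Da of mass).
control = ([500.0, 500.5, 501.0], [60.0, 30.0, 10.0])
labeled = ([500.5, 501.0, 501.5], [60.0, 30.0, 10.0])
uptake = deuterium_uptake(control, labeled, charge=2)
print(uptake, relative_uptake_percent(uptake, max_uptake_da=4.0))  # 1.0 25.0
```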

Saturday, January 17, 2015

DigestIF - A QC standard for tryptic digestion!

This falls under the: Why the heck didn't I think of this!?!?!? category.

One of the reasons the genomics people sometimes get to eat our lunch during grant competitions is their reproducibility. It's high. Crazy high. From lab to lab and from sample to sample. A big reason for this is the fact that every person using a certain sequencer uses the reagent kit provided by the manufacturer. They use that kit and follow the directions to the letter. People that make variations to those instructions are treated as outcasts and forced to fend for themselves in the desert alone.

I probably exaggerated a little. But it seems that way. In our field, however, sample prep is all over the place. Sometime in the next 4-6 weeks I will be visiting my 100th proteomics lab, and I can tell you for certain that no one preps their samples the same way. Even in the same lab you'll find one guy who preps with FASP and another person who only does liquid digestion. And even if two people are using FASP, you'll find different concentrations of ammonium bicarb or iodoacetamide between people. We're used to tinkering with things. We find better methods that way. But when you're up for a clinical grant and the money goes to the guy with the HiSeq and not you, this has a lot to do with it.

<End pre-coffee grumpy tirade>

So, what is this DigestIF? It's a QC standard for determining the efficiency of your tryptic digestion! It's a synthetic protein that gives off peptides of varying hydrophobicities when digested. So you not only have a readout of your digestion efficiency, but also a way of troubleshooting your LC and MS once the peptides are produced.
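To show what "digestion efficiency" can mean in numbers, here is a minimal Python sketch: an in silico tryptic digest using the classic rule (cleave after K or R, but not before P) that labels each peptide with its number of missed cleavages, plus one possible efficiency metric, the fraction of signal coming from fully cleaved peptides. The sequence, intensities and metric are hypothetical illustrations; DigestIF's own peptides and readout are described in the paper.

```python
import re

def trypsin_digest(sequence, max_missed=0):
    """In silico trypsin digest: cut C-terminal to K/R unless followed by P.

    Returns (peptide, missed_cleavages) tuples for 0..max_missed missed sites.
    """
    # Cut positions sit just after each K/R that is not followed by P.
    cuts = [m.end() for m in re.finditer(r"[KR](?!P)", sequence)]
    bounds = [0] + [c for c in cuts if c < len(sequence)] + [len(sequence)]
    peptides = []
    for i in range(len(bounds) - 1):
        for j in range(i + 1, min(i + 2 + max_missed, len(bounds))):
            peptides.append((sequence[bounds[i]:bounds[j]], j - i - 1))
    return peptides

def digestion_efficiency(observations):
    """Fraction of summed intensity from peptides with zero missed cleavages.

    observations: list of (missed_cleavages, intensity) for detected peptides.
    """
    total = sum(intensity for _, intensity in observations)
    complete = sum(intensity for mc, intensity in observations if mc == 0)
    return complete / total

print(trypsin_digest("AGKPLRMSKTR", max_missed=1))
# [('AGKPLR', 0), ('AGKPLRMSK', 1), ('MSK', 0), ('MSKTR', 1), ('TR', 0)]
print(digestion_efficiency([(0, 900.0), (1, 100.0)]))  # 0.9
```

Note that the K in "AGKP" is skipped because proline follows it, which is the same kind of "hard for trypsin" site a designed QC protein can deliberately include.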

A LOT of work went into designing the protein to make it realistic, with regions that are easy for trypsin to get to and also regions that are difficult to digest. Let's go back to the first sentence in this post. Okay...maybe I would have thought of having a digestion control, but I wouldn't have thought to (or had the necessary knowledge to...) design a protein in that way.

So this probably falls under the: Why the heck didn't I think of this idea and then tell someone with the skills to pull this off?!?! category. Fortunately, I didn't have to. These guys came up with this and it's brilliant!

Eventually, I think we'll see a lot of standardization in our field, particularly when proteomics really takes off in the clinic. Until then, maybe we can use something like this as a control to make sure we're at least all getting the same digestion efficiency. Even better, maybe this is the control that will help us get to the best standardized method in the future.

I think everyone should read this paper from Dorothee Lebert et al. There is some guy named Aebersold listed as an author on it as well.

TL;DR: You can spike this protein into your lysate and it will make an amazing QC standard for your whole system, including digestion. Ben needs to get this puppy off his lap and get some coffee...

Friday, January 16, 2015

Peptide Atlas access through the Genome Browser

It is becoming increasingly apparent that if we want to solve more subtle biological problems (cancer?) we're going to need to pool resources with the genomics people. The problem, a lot of the time, is that we don't speak the same language.

Smart people are working on it all the time, though! Proof?

The UCSC Genome Browser now houses a recent build of the Peptide Atlas!

BTW, if you haven't checked on the progress of the Peptide Atlas in a while, you might like to know that the team hasn't been slacking off. They are up to over 420 MILLION MS/MS spectra and have data on over 15,000 proteins, all of which can be downloaded in FASTA format.

You can check out the Peptide Atlas in the Genome Browser here!

And the Peptide Atlas home here!

Shoutout to Alexis for forwarding me this story from UCSC.

Thursday, January 15, 2015

Worried about tight search tolerances and FDR?

Sequest and Mascot popped up when I was in high school. They've been improved and tweaked and they are still the industry standard -- and for good reason. However, the core algorithms were designed for a completely different generation of instruments. I don't mean to disparage these algorithms but we may need to exercise caution when processing data and interpreting results obtained on today's instruments.

There has been some argument about how best to set up data processing when using target-decoy based approaches. I'm going to simplify the positions to a ridiculous level and split them into two camps.

1) The pre-filterers: In this approach, we say "my instrument is extremely accurate, so I should only allow Sequest to look at data that tightly matches my MS1 and MS2 tolerances." For example, you would set your MS1 tolerance to 5 ppm and your MS/MS tolerance to 0.02 Da. This way you know that only good data is going into Sequest.

Detractors of this method might say that if you are doing FDR after Sequest and all of your data is good, the FDR will be too strict and will throw out good data. Some people would argue that FDR works better the more bad data it gets.

2) The post-filterers: In this method you ignore the high resolution accurate mass capabilities of your instrument until the end. You search your data with wide tolerances. You get lots of bad matches out of your run, but then your target-decoy step has tons of bad data to throw out and train itself on, making your final results better. This lets the algorithms work the statistical magic they were originally developed for.

Well, Ben, what's the best way to do it, then?

In this paper from Elena Bonzon-Kulichenko et al. (which, btw, was the paper I found and lost and was raving about a few days ago), they take this issue apart systematically using both Sequest and Mascot. After being ruthlessly systematic, they find that number 2 is the way to go, with this important caveat: after running the data this way you should still use your mass accuracy as a filter. In other words, line up your peptides so that you see the experimental and theoretical MS1 (and/or MS/MS, though that is much harder) masses and throw out the data that doesn't match closely (or use it as a metric to understand how well your search engine/target-decoy pipeline worked). The downside is that it's a whole lot more computationally intensive to search a wider mass range.
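The "search wide, then filter on mass accuracy" recipe can be sketched in a few lines of Python. The FDR estimate here is the simple decoys/targets ratio at a score cutoff, and the 5 ppm window, the PSM dictionary fields and the toy scores are all assumptions for illustration. This is not the pipeline Bonzon-Kulichenko et al. built, and real tools (Percolator, for instance) do considerably more.

```python
def ppm_error(observed_mz, theoretical_mz):
    """Signed precursor mass error in parts per million."""
    return (observed_mz - theoretical_mz) / theoretical_mz * 1e6

def target_decoy_filter(psms, fdr=0.01):
    """Keep target PSMs scoring at or above the lowest cutoff where
    decoys / targets <= fdr. Higher scores are assumed to be better."""
    ranked = sorted(psms, key=lambda p: p["score"], reverse=True)
    targets = decoys = 0
    cutoff = None
    for psm in ranked:
        if psm["decoy"]:
            decoys += 1
        else:
            targets += 1
        if targets and decoys / targets <= fdr:
            cutoff = psm["score"]
    if cutoff is None:
        return []
    return [p for p in ranked if not p["decoy"] and p["score"] >= cutoff]

def mass_accuracy_filter(psms, max_ppm=5.0):
    """Post-search filter: drop PSMs whose precursor error exceeds max_ppm."""
    return [p for p in psms
            if abs(ppm_error(p["obs_mz"], p["theo_mz"])) <= max_ppm]

# Toy results from a wide-tolerance search (all values hypothetical).
psms = [
    {"score": 10, "decoy": False, "obs_mz": 500.0005, "theo_mz": 500.0},
    {"score": 9, "decoy": False, "obs_mz": 500.0100, "theo_mz": 500.0},
    {"score": 8, "decoy": True, "obs_mz": 500.0000, "theo_mz": 500.0},
    {"score": 7, "decoy": False, "obs_mz": 500.0010, "theo_mz": 500.0},
    {"score": 6, "decoy": True, "obs_mz": 500.0000, "theo_mz": 500.0},
]
passed = target_decoy_filter(psms, fdr=0.34)
final = mass_accuracy_filter(passed)
print([p["score"] for p in passed])  # [10, 9, 7]
print([p["score"] for p in final])   # [10, 7]
```

The 34% FDR is only there so a five-PSM toy example produces something; the point is the order of operations. The score-9 match survives the target-decoy step but is 20 ppm off, so the mass accuracy filter catches it.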

It is an interesting topic that has been visited a lot (the title of this paper starts with "Revisiting"), but this analysis is pretty convincing. I'm definitely interested to hear what y'all think!

In the end, I'm on the old school side of things. FDR, of any kind, is just a shortcut so that you don't have to manually verify so many MS/MS peptide spectra. I assess a pipeline with an FDR calculator in this way: the better the pipeline, the fewer MS/MS spectra I need to look at. I still haven't seen a single one that I would trust enough to send a dataset to a journal without looking through a few hundred MS/MS spectra first, but that day is coming!

Wednesday, January 14, 2015

Let's meta-analyze some historic histone data sets!

This paper out of Benjamin Garcia's lab is the reason we need good accessible data repositories. In the paper from Simone Sidoli et al., this group looks at the consequences of some derivatization techniques used in histone analysis. Turns out that some of them create unique post-translational modifications. If you didn't know to look for them, you might be missing a ton of your MS/MS assignments.

Now that we do know about them, however, we can go back through datasets generated using these compounds and maybe find cool new stuff!

Thanks @PastelBio for another great read.

Tuesday, January 13, 2015

Not getting enough phosphopeptide IDs? Try stepping your collision energy

I stole the above figure from this not-quite-new-but-new-enough-for-this-silly-blog paper from JK Diedrich et al., out of John Yates's lab.

In this study they looked at the utility of the stepped collision energy function on my favorite instrument, the great Q Exactive. The paper is open access and definitely worth a read. I see the small molecule people making use of the stepped NCE function, but rarely us proteomics people.

On the QE, when you employ the step function, your AGC target is divided into 3 parts. The first 1/3 of your target is isolated through the quadrupole, fragmented and stored in the HCD cell at the lowest collision energy. The next 1/3 of your target is gathered at the central collision energy and the final part is collected at the highest collision energy on your step. All of these fragments are deposited back into the C-trap and go in for one single Orbitrap scan.

If you have older QE software, your fragmentation energies must be symmetrical (you set the central normalized collision energy, and then a percentage above and below it). On the newer builds (QE 2.3 and up) you can set all 3 energies to whatever you want.
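To make the step mechanics concrete, here is a small Python sketch of the bookkeeping described above: the AGC target split evenly across three collision energies, and a symmetric set of energies built from a central NCE. One caution: the sketch assumes the step percentage is taken relative to the central NCE (so 30 with a 20% step gives 24/30/36). Check your own software's definition before copying numbers. The function names and settings are hypothetical, and this is not instrument code.

```python
def split_agc_target(agc_target, n_steps=3):
    """Each collision energy step gets an equal share of the AGC target."""
    return [agc_target / n_steps] * n_steps

def symmetric_stepped_nce(central_nce, step_percent):
    """Low/central/high NCE for a symmetric step.

    ASSUMPTION: the step is a percentage of the central NCE. Newer software
    lets you enter the three energies directly instead.
    """
    delta = central_nce * step_percent / 100.0
    return (central_nce - delta, central_nce, central_nce + delta)

# Hypothetical settings: 3e6 AGC target, central NCE 30 with a 20% step.
for nce, charges in zip(symmetric_stepped_nce(30, 20), split_agc_target(3e6)):
    print(f"NCE {nce:g}: accumulate {charges:.0f} charges, fragment, hold in HCD cell")
print("All three fragment populations -> C-trap -> one Orbitrap scan")
```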

I've used this function when doing top-down and when looking at glycopeptides, but this paper shows clear utility for this function when doing phosphopeptide analysis and also for liberating TMT reporter ions from peptides. On the histogram above, the x-axis is the a-score of the phosphopeptides. The sum column shows that they found hundreds of new phosphopeptides by simply checking the stepped NCE box and re-running.

It may be worth taking a second look at on your less ordinary samples!

Monday, January 12, 2015

Interested in R? Another cool free set of courses is coming up.

R is a super powerful tool for all sorts of biology. Thanks to some great proteomics researchers there are tons of great tools out there in the R-verse for us. Problem is, you have to learn how to use R first.

I mentioned a while back that I'm working on my certificate (slowly...) in R through a program at Johns Hopkins.

Another program is starting in a couple of days through some place called Harvard? You can find more information about it here.

Shoutout to @pitman_mark for leading me to this. If you're interested you need to sign up soon!

Sunday, January 11, 2015

Proteomic investigation of the effects of methamphetamine

Methamphetamine (METH) was the subject of the amazing AMC series, Breaking Bad. It is an awful compound that has been growing in use, particularly in rural areas. It is a big problem around my home town and seems to be worse every time I go there.

We probably all have a feel for how it works. Like most stimulants, METH looks a little bit, structurally, like one of our natural neuro-happy compounds. It binds there instead of the natural compound and induces euphoria or something. Since these receptors are occupied, your body down-regulates production of the natural happy chemicals, and when you are out of METH you're sad and lethargic and probably addicted. There are dozens of compounds over the last century that have worked this way, and most of them are illegal, but METH seems more sinister (Google "before and after" meth photos...or don't, it's pretty gross).

Travis Wearne et al. at Macquarie University decided to take a proteomic look at what is going on in the mammalian brain after chronic METH exposure (described in this paper in JPR). They repeatedly exposed mice to METH, took out their brains and compared them to control mice. I hope I'm not the only one who is super curious about the behavior of mice on METH (bouncing off cages? trying to steal ATMs?). They measured this by putting motion sensors in the cages. Unfortunately, as far as I can find, they don't really go into these results.

For the comparative proteomic analysis, they use an LTQ, spectral counting, and the Scrappy analysis program to determine up- and down-regulation.
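For anyone who hasn't done spectral counting: the raw number of MS/MS spectra matched to a protein scales with both its abundance and its length, so counts are usually normalized before comparing conditions. The Python sketch below uses NSAF (normalized spectral abundance factor), one common normalization, followed by a log2 ratio between conditions. Whether Scrappy uses NSAF or some other statistic isn't covered here, and the proteins, counts and lengths are made up.

```python
import math

def nsaf(spectral_counts, lengths, pseudocount=0.0):
    """Normalized spectral abundance factor for each protein:
    ((SpC + pseudocount) / length) divided by the sum of that over all proteins.
    A small pseudocount keeps zero-count proteins from giving log2(0) later."""
    saf = {p: (spectral_counts[p] + pseudocount) / lengths[p]
           for p in spectral_counts}
    total = sum(saf.values())
    return {p: value / total for p, value in saf.items()}

def log2_ratios(treated, control):
    """log2(treated / control) for proteins present in both NSAF tables."""
    return {p: math.log2(treated[p] / control[p]) for p in treated if p in control}

lengths = {"A": 100, "B": 300}  # residues (hypothetical)
meth_nsaf = nsaf({"A": 20, "B": 30}, lengths, pseudocount=0.5)
control_nsaf = nsaf({"A": 10, "B": 30}, lengths, pseudocount=0.5)
print(log2_ratios(meth_nsaf, control_nsaf))  # A positive, B negative
```

Because NSAF values sum to 1 within a run, a big increase in one protein pushes the others down slightly, which is why protein B comes out negative here even though its raw count did not change.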

End results? Tons of messed up brain proteins. When I pulled this paper up, I seriously assumed it was going to be a phospho- analysis, which I would associate with short term effects. You are actually changing the regulation of protein levels with this drug? Holy cow. Even worse? When they look at the proteins that are differentially regulated via Ingenuity Pathway Analysis, they have a huge overlap with genes that we know are messed up in people with schizophrenia...

This is a nice little study that really pounds home how messed up this drug is.

Saturday, January 10, 2015

Proteomic analysis of hairdresser mucus.

This has to be an entry. It's a super-unique study and I was able to find the two images I've plugged in here to go along with it.

Background: Hairdressers have increased risk of having problems with their airways. It is thought that the reason for this has something to do with the nasty hair bleaching compounds, but not much else is known about it. Sounds like a job for Proteomics!

Experimental setup: A nasal mist solution was created that contained the nasty hair bleach compounds. Hairdressers diagnosed with sinus problems linked to these compounds, and people without these problems, were exposed to the nasal mist. Both before and after the nasal spray, the (hopefully well compensated) individuals were subjected to nasal lavage (also known as irrigation, which seems like a less pleasant term...). The resulting nasal output was filtered and digested the way we'd digest any other protein source.

The resulting peptides were studied by SRM, looking for 200-300 peptide species. I'm a little unclear from the paper why these targets were chosen. I assumed we'd be looking at a global discovery quan experiment, but it is pretty clear these investigators know a lot more about nasal irrigation than I do.

The results of the study indicate both differential expression of some proteins involved in epithelial barriers, as well as significant levels of oxidation of many proteins studied (which they must have known about before or they wouldn't have been able to set up their SRM transitions).

All in all, an interesting system. Due to the small sample size (humans), several levels of statistical analysis were used to determine the significance of observations, and that is a real shining point in this paper.

The paper is by Neserin Ali and you can find it in its ASAP format at JPR here.

Nice overview of the Human Protein Atlas

I missed this earlier in 2014. Nature did a nice overview of the Human Protein Atlas and its history around May. It makes for a nice short read if your brain is having trouble firing up this morning.

You can find it here.

And here is the Human Protein Atlas itself.

Friday, January 9, 2015

All Proteomics Conferences of 2015

Speaking of Pastel BioSciences, here is a great new resource they've put together. Every Proteomics conference of 2015? Hopefully I'll see a bunch of you guys at some of these (sadly, I can't go to all of them....)

Thursday, January 8, 2015

PROTEOFORMER!

The picture above is awesome.

Do you know about the "next gen" sequencing method called Ribo-Seq? Me neither. Thanks to the magic that is Wikipedia, I now know a little bit about it. Apparently it figures out which messenger RNAs are being actively translated by ribosomes at a given point in time. With that information we get a snapshot of what the cell is telling its ribosomes to translate. Cool, right? Sound hoaxy? Sure! I'll ask a genomics expert about it later.

But genomics labs are generating this data. The first paper Wikipedia cites is from 2009, so that info is out there.

This is where PROTEOFORMER comes in. This pipeline takes that information and translates it into protein sequence information. Then you can search that information with a variety of tools available through Peptide Shaker and SearchGUI. If this Ribo-Seq thing says it's present and the MS/MS confirms it, I'd say that Ribo-Seq thing isn't a bad idea!
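The core step of turning a Ribo-Seq-supported open reading frame into something a search engine can use is plain translation with the standard genetic code. The Python sketch below shows only that step, starting from a given offset and stopping at the first stop codon. Choosing the start sites from ribosome profiling reads, which is the hard part PROTEOFORMER handles, is not shown, and the sequences and function name are hypothetical.

```python
# Standard genetic code (NCBI table 1), codons ordered TTT, TTC, TTA, ..., GGG.
BASES = "TCAG"
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODONS = [a + b + c for a in BASES for b in BASES for c in BASES]
CODON_TABLE = dict(zip(CODONS, AMINO_ACIDS))

def translate_orf(nucleotides, start=0):
    """Translate from `start` until the first stop codon (or end of sequence).

    Accepts DNA or RNA (U is read as T). An incomplete trailing codon is
    ignored, and codons with ambiguous bases (e.g. N) become 'X'.
    """
    seq = nucleotides.upper().replace("U", "T")
    protein = []
    for i in range(start, len(seq) - 2, 3):
        aa = CODON_TABLE.get(seq[i:i + 3], "X")
        if aa == "*":
            break
        protein.append(aa)
    return "".join(protein)

print(translate_orf("ATGGCTAAATGA"))             # MAK
print(translate_orf("AUGGCUAAAUGA"))             # MAK (RNA input)
print(translate_orf("CCATGGCTAAATGA", start=2))  # MAK (start site at offset 2)
```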

You can check out PROTEOFORMER at its website here.

You can read the original paper from J. Crappe et al. here.

Thanks go to Pastel BioSciences for leading me to this great resource!

Wednesday, January 7, 2015

ATM and ATR in Arabidopsis

In grad school we had a series of guest lectures every Friday. In order to get the 1 credit for attending you could miss no more than 2 lectures per semester. I planned mine out so that I didn't have to hear anything about Arabidopsis. Yes, I know it is an important model organism. And yes, I know GMO crops are necessary to the continued habitation and population growth of this planet...I just always found plant research sooooo boooorrrrrriiiiinnngggg.

Now, this is weird, cause I've got like 10 papers open on my desktop here and I'm going to tell you about the Arabidopsis one. Why? Cause this study is so good it makes this ugly little plant interesting! The paper is from Elisabeth Roitinger, Manuel Hofer et al., and is a study on quantitative phosphoproteomics of ATM and ATR knockouts in this plant. (In press and open access, at least for now, at MCP here.)

If you aren't familiar with ATM/ATR, you've definitely never done cancer research. ATM and ATR are massive central regulators of DNA repair. If something happens to your DNA where it needs to be repaired, one or both of these guys is going to start a phosphorylation chain reaction that will make the damaged cells stop dividing and recruit proteins to do DNA repair. If you knock out either of these genes (some people actually live...though not for long...with mutations in these genes) it almost always means your mouse gets cancer and dies real young.

See why this is interesting? What the heck are these proteins doing in plants?!?!? Turns out, the same exact stuff!

This group made ATM and ATR knockout (or knock-down...I'm not going to study the genetics part) Arabidopsis plants and then FASP digested the protein. There were surprisingly few extra steps involved. Getting protein out of plant tissue is tough, but the protocol provided here is one of the most straightforward ones I've seen and the one I'll follow in the future. Next, they iTRAQ labeled their WT and mutant strains and enriched phosphopeptides with titanium dioxide and IMAC. The enriched samples were SCX fractionated, and the resulting fractions were analyzed on an Orbitrap Velos.

The Orbitrap method is interesting. Every precursor was fragmented once with CID and once with HCD. Which is better for phosphopeptides? CID or HCD? Who cares! On a hybrid instrument you can do both, so why not! Is ETD better for phosphopeptides? Sure. But CID+HCD will, under most conditions, still be faster, and the 2 scans together may be almost as good -- and more speed should result in more total PSMs.

How'd they do with this method? 15,000+ total quantifiable phosphorylation sites. Boom.

By the way, I mentioned above that ATM and ATR kick into play when you induce DNA breaks. They tested this model system by irradiating these knockout plants. Compared with irradiated wild-type plants, the irradiated knockouts showed differential regulation that closely mirrored what we know occurs in humans and mice.

In the end? A really nice phosphoproteomic study AND a great new model system for cancer researchers who don't mind working with plants...

...which may not be so bad, after all.

Monday, January 5, 2015

New study shows MudPit still has some big advantages!

Among the many things I've learned today? There is a television show called MudPit. It appears to feature a mixture of live action and computer animated characters. Out of curiosity I watched 20 or 42 seconds of it. Worse things have happened to me. Off hand, I can't come up with many of them...

That aside, MudPit in proteomics is an awesome thing: online 2D fractionation that made the coverage of our proteomics experiments just sky-rocket, even on our older and slower ion trap systems. Recently, however, MudPit has fallen out of favor with a lot of groups. For one thing, it's kind of hard to set up. For another, you end up with an absolute ton of RAW data at the end, and some programs are going to have trouble with it (Percolator, for example, in its 32-bit iteration can have trouble with that much RAW data at once).

Some labs still stick to it, and with good reason. Look at the dozens of great papers that roll out of Mike Washburn's group at Stowers from the fleet of LTQs they have running MudPit around the clock. I've been lucky enough to spend some time there. The way they run it is really clever: each sample is 24 hours, so you can load the next one when you come in the next day. I'd argue that as many pure biology papers have rolled out of that room full of LTQs as out of any other room full of instruments in the world.

So here is the question: If you have an ultra-modern nanoLCMS system, is there anything to gain from MudPit? Considering the amazing level of coverage that we see out of cell lysates in 1D separations these days, my initial guess would be no. I would have guessed that we only really gain a lot with MudPit when the instruments are slower or not as sensitive.

A recent paper from Aimee Rinas & Lisa Jones (IUPUI Lisa, not Fred Hutch Lisa) shows where this technology can help find low-level peptide modifications that may not be detectable with one-dimensional separation alone.

In this paper (abstract here), they look at fast photochemical oxidation of proteins (F-POP) in a complex lysate on a Q Exactive. Even my favorite mass spec of all time has limitations. The F-POP modifications happen at exceedingly low stoichiometry and are tough to detect in a whole cell digest. By switching over to MudPit, they detected 37% more modifications overall than with 1D separation alone.

They go on to show that this relationship extends to less complex samples, by performing the same comparison on some huge protein complexes.

Can we learn stuff from going back to older methods? Absolutely, of course, every time. It all depends on the experiment you need to do. If your freezer has a backlog of 2,000 samples in it, the added time and complexity of MudPit may not be worth it for you. But if you really need to get down in the grass for one super important sample, this technique is something you can keep in the toolkit. Of course you'll need to have 2 pumps/mixers on your LC to pull it off, but a lot of LCs are equipped this way.

And please don't watch the Mudpit show...

Saturday, January 3, 2015

Fake peer review?

Man, I'm not entirely sure where this article originally came from, but I left this depressing tab open on my desktop for the last couple of days before finally reading it and the related links in the article. People have successfully been faking peer review...and some of them are getting caught.

It is an interesting read, definitely wondering why I didn't think of it. (kidding...)

Thursday, January 1, 2015

My favorite proteomics stuff of 2014

I was reading an opinion piece I found on Reddit this morning that said there were far too many "Best of 2014" articles out there. Which reminded me that I hadn't written mine yet!

In case you care, here are my favorite Proteomics News stories of the year!!! Man, there is soooo much...this may need to just be a small portion.

I'm going to call 2014 the year of quality control in proteomics! The Pierce HeLa digest was released and so many people are using the PRTC standard or similar things to keep accurate metrics on their samples. We also saw new QC programs such as qcML, QuaMeter and nodes for Skyline.

2014 also saw the release of my all-time favorite free de novo sequencing software, DeNovoGUI. This has been an incredible year for software in general: ptmRS? Byonic in PD? MS-Viewer? Peptide Shaker? Proteo Suite? Just a ton of cool stuff.

This is the year we really started to see people leverage the power of comparing RNASeq variant data to shotgun proteomics data. (Another paper: proteogenomics of cancer!) It still isn't trivial, by any means, but some great new tools are on the way (particularly some streamlined stuff out of Minnesota... more on this awesome stuff later!)

Of course, the big news was this stuff:

Sure, it may be a bit controversial, but after ruminating on this for 7 months or so, this is my opinion: this was a really good thing for us. The genomics people have been able to say for years "we can see every gene." Think about where "proteomics" was when the term was coined. We could load up gel slices on an LCQ and if we came back with 300 proteins that was a big freaking paper. People outside our field who jumped on the proteomics bandwagon 10 or 15 years ago and were turned off may not have ever stopped to see the light years we have come in that time. This cover says in bold terms: we're here and if you give us the resources we can beat the genome sequencers. Hands down.

Instrumentation-wise, this was another great year: QE HF?!?! The mass spec we all knew was coming, but more awesome than any of us guessed?!? w00h000! The small molecule crowd also ended up with their own version of the Q Exactive (the Focus!), but that awesomely cheap box isn't something we'll be using. Kind of on the instrument side of things, someone finally started building computers for proteomics data processing. I know I probably go on about this too much, but once you've searched a whole cell digest file in 2 minutes for the first time you feel pretty dumb for ever waiting days...or weeks...for a bunch of fractions to finish up!

To wrap this up, I'm gonna revisit my favorite papers of 2014.

XMan: A cancer database with every currently known cancer mutation. Yes, I'm biased, as it came out of my mentor's lab. It's one of those papers, though, where you're thinking "why didn't I come up with this?"

Labeled and unlabeled intact protein quantification. Come on, we all want to get there. Top down is the future; it's just still too freaking hard, but here we see the use of available tools that remove one of the limitations. Top down can be quantitative!

The MSAmanda paper came out in June! We need to get off this addiction we have to these search engines from the 90s. Yes, they're good. But new algorithms are going to be necessary to really dig into all of this data we're generating.

My early pick for my favorite paper of the year still makes this list: The Proteomics Ruler!

It's really tough; there is so much good stuff out there this year. I try really really hard but I know I didn't read 10% of what is out there. I probably didn't read 10% of this year's abstracts. The paper I'm going to refer to the most in 2015 is probably this one from Max Planck. Systematically step by step how to fully optimize a QE and a QE HF? I have a day job and I'll be carrying a copy of this paper to work with me every day.

To wrap up this long winded ramble: 2014 was a great year for us, and I can't wait to read about what you guys are working on now!
