Wednesday, January 28, 2015

Another try at combining results from multiple iTRAQ experiments

Throughout the history of this blog, one kind of post almost always gets me reader feedback: the ones where I get really excited about a way to combine results from multiple reporter ion studies.

When I was at the NIH, we would run this experiment: tissue or plasma from 4 patients with some form of severe malaria, iTRAQ labeled (now TMT 10plex) and mixed with similarly labeled peptides from patients with less severe malaria symptoms. The awesome scientist who took over my position when I left has continued a lot of this work. The end result? Hundreds, maybe thousands, of files from studies that are interesting in their own right but can't be linked together to form a bigger picture. LOTS of labs are in this position, hence the interest in being able to link multiple iTRAQ/TMT studies together.

Problem is, this stuff is complicated. I've seen talks where people use one channel as a common control in every run, or spike in standards, but it seems like a good statistician can always punch holes in the conclusions that come out, even when the results look good. There are lots of variables being tossed around, and linking these datasets takes some high-end statistics that, quite honestly, I wouldn't understand anyway.
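For anyone who hasn't seen the common-reference trick: if every run carries the same control (or pooled) sample in one channel, each protein's reporter intensity can be expressed as a log ratio to that channel, which puts different runs on a shared scale. Here's a minimal sketch under some loud assumptions: the nested-dict data layout, the channel name `"ref"`, and the function names are all hypothetical, and per-channel median centering is just one common normalization choice, not something taken from any particular paper. It does nothing about the statistician's objections (different variances between runs, ratio compression, proteins missing from some runs).

```python
import math
from statistics import median

# Hypothetical data layout (an assumption for illustration only):
#   run = {protein_id: {channel_name: reporter_intensity}}
# and every run contains the shared control/pool in a channel named "ref".


def log_ratios_to_reference(run, ref="ref"):
    """Express each non-reference channel as log2(channel / reference) per protein."""
    ratios = {}
    for protein, channels in run.items():
        ref_intensity = channels[ref]
        ratios[protein] = {
            ch: math.log2(value / ref_intensity)
            for ch, value in channels.items()
            if ch != ref
        }
    return ratios


def median_center(ratios):
    """Subtract each channel's median log ratio so every channel is centred on 0."""
    channels = {ch for per_protein in ratios.values() for ch in per_protein}
    medians = {
        ch: median(p[ch] for p in ratios.values() if ch in p)
        for ch in channels
    }
    return {
        protein: {ch: v - medians[ch] for ch, v in per_protein.items()}
        for protein, per_protein in ratios.items()
    }


def pool_runs(runs, ref="ref"):
    """Merge normalized runs into {protein: {"run:channel": log ratio}}."""
    pooled = {}
    for run_id, run in runs.items():
        centered = median_center(log_ratios_to_reference(run, ref))
        for protein, per_channel in centered.items():
            for ch, value in per_channel.items():
                pooled.setdefault(protein, {})[f"{run_id}:{ch}"] = value
    return pooled


if __name__ == "__main__":
    runs = {
        "A": {"P1": {"ref": 100.0, "s1": 400.0},
              "P2": {"ref": 100.0, "s1": 200.0},
              "P3": {"ref": 100.0, "s1": 100.0}},
        "B": {"P1": {"ref": 50.0, "s1": 100.0},
              "P2": {"ref": 50.0, "s1": 50.0}},
    }
    for protein, values in sorted(pool_runs(runs).items()):
        print(protein, values)
```

In the toy data, P3 only shows up in run A, which is exactly the kind of gap that makes combining lots of runs messy in practice.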

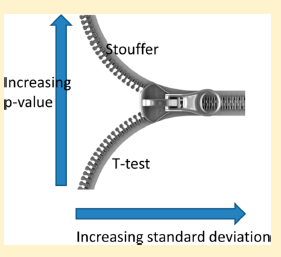

So, here we go again! This paper in this month's JPR from D. Pascovici et al. describes using the Stouffer z-score to combine results from multiple iTRAQ studies. The approach appears to be derived from robust statistics previously used in meta-analysis of microarray data. The results look impressive, and the method is applied to two separate biological systems: one in plants and one in a rodent brain system. The plant system is really cool because they actually get a visible phenotype from the plant.
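For anyone else who doesn't speak fluent statistics, the textbook version of Stouffer's method is short: convert each experiment's p-value into a z-score, then combine the k z-scores as Z = (w1*z1 + ... + wk*zk) / sqrt(w1^2 + ... + wk^2), which reduces to (z1 + ... + zk) / sqrt(k) when all weights are equal. Below is a minimal Python sketch of that generic method. It is not the Pascovici et al. pipeline: how they derive per-protein p-values, handle direction of change, choose weights, and correct for multiple testing are things to check in the paper itself. The function names are hypothetical, and signing each z-score by its fold-change direction is an assumption on my part, one common way to make sure a protein that goes up in one experiment and down in another doesn't get scored as consistently changed.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal: mean 0, sd 1


def one_sided_p_to_z(p):
    """Upper-tail one-sided p-value -> z-score (p must be strictly between 0 and 1)."""
    return STD_NORMAL.inv_cdf(1.0 - p)


def signed_z(p_two_sided, log_fold_change):
    """Two-sided p-value -> z-score carrying the sign of the observed fold change.

    Assumption for illustration: direction is taken from the log fold change.
    """
    z = STD_NORMAL.inv_cdf(1.0 - p_two_sided / 2.0)
    return math.copysign(z, log_fold_change)


def stouffer(z_scores, weights=None):
    """Weighted Stouffer combination.

    Returns (Z, one-sided upper-tail p-value) where
    Z = sum(w_i * z_i) / sqrt(sum(w_i ** 2)).
    """
    if not z_scores:
        raise ValueError("need at least one z-score")
    if weights is None:
        weights = [1.0] * len(z_scores)  # equal weights: sum(z) / sqrt(k)
    if len(weights) != len(z_scores):
        raise ValueError("weights and z_scores must have the same length")
    numerator = sum(w * z for w, z in zip(weights, z_scores))
    denominator = math.sqrt(sum(w * w for w in weights))
    combined_z = numerator / denominator
    return combined_z, 1.0 - STD_NORMAL.cdf(combined_z)
```

For instance, three experiments that each give a one-sided p of 0.1 (z of about 1.28, nothing to write home about individually) combine to Z of about 2.22 and p of about 0.013: consistent, modest evidence across runs adds up. With signed z-scores, a two-sided combined p-value would be 2 * (1 - cdf(|Z|)) instead.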

Yes, I'm in over my head. There are lots of math symbols in this paper that I don't recognize, which limits my ability to be critical here. But that's for people with different training. In the end, if you show me (and some reviewers who are obviously more qualified) that your system gives you results that you can match to a phenotype, I'm gonna want to try it out. And I'm gonna tell lots of people about it.

I'd love to hear what you guys think of this!


The datasets they use lack a known ground truth, so it's hard to know how well the method performs. It would have been better to benchmark the method's performance with a spike-in experiment. There's another method for the same purpose that is more convincing.

Thanks Ben for the interest in our paper; I'm glad somebody read it! We find this sort of approach handy for many datasets, as in our experience it has a fairly low FDR, though it's by no means the only approach we use. Over time we found that people in proteomics were unfamiliar with the concept of combining p-values, so explaining it became the main motivation for writing this up. I agree with Dario, and it is a fair comment: in an ideal world, the datasets used would have been controlled ones such as spike-in experiments. As it happened, this paper became more of an offshoot of a different project, and we liked the idea of having a real set with a gradation of effects, so we wrote it up using that. Thanks both for looking at it.

Dana Pascovici