Monday, July 31, 2017

Improving nanoLC with temperature controls!

At the top of the list of variables I never thought about manipulating, I'd like to present this new study at JPR!

To be perfectly honest, I only increase the temperature of my stationary phase for 2 reasons:

1) To keep it higher than room temp, so that daily lab temperature fluctuations don't have adverse effects on my reproducibility

2) To reduce my back pressure so I'm not worried about column fatigue and normal, inevitable back pressure increase leading to an error 15 minutes after I get home

However, I'm also fully aware of this paper and its key points....

I've seen this numerous times in the field -- you can't get cutting edge proteomics without perfect chromatography -- and this paper suggests a number of improvements beyond anything I've seen before.

How 'bout this -- lower column temperature can improve the peak shape of modified peptides?

Or -- trapping at low temperature and cleaning your trap at higher temperature can massively reduce crossover contamination?!?

Or -- you can't keep the temperature too high or it will degrade your columns?!?

All stuff I had never really stopped to consider, and all of it is extensively evaluated in this nice paper.

Quick aside -- I stopped for a second to wonder who would be evaluating these conditions and checked on the authors. Evosep Biosystems is a really interesting startup that is collaborating with the Max Planck Institute and Denmark's university systems to address the biggest challenges facing clinical proteomics.

They don't have any products yet -- just some really interesting videos on YouTube that demonstrate more innovative thinking like the stuff in this paper, but with a motto like this....

...I know I'm going to be keeping an eye on them!

Sunday, July 30, 2017

Fragmentation patterns in NATIVE top down proteomics!

Aaaand....in case you were just wondering how you might do NATIVE top down proteomics (what?!?), do I ever have the paper for you!

This paper is quite comprehensive and looks at the fragmentation of something like 150 proteoforms in a bunch of samples to figure out the answer to the questions I expect a lot of people in our field will be asking in the year 2029.

If you wanted to do a comprehensive top down proteome of some cells while leaving the proteins in their fully folded native state, you may want to use different fragmentation scoring techniques, because they do fragment differently. I'm mostly leaving this here for myself to reference down the road, so I won't get into the data much.

To answer a question no one has asked yet, you need tomorrow's mass spectrometer. This team used a slightly modified high field quadrupole Orbitrap system

that they previously described here.

The minor modifications are that it is capable of MS3, can scan up to 20,000 m/z, has a couple of ion funnels, and has a quad that can isolate extremely high mass ranges.

This is a great resource today if you're asking questions way more advanced than I am!

Saturday, July 29, 2017

Time to accelerate the search for missing human proteins!

We still have a "missing protein" issue. Questions like: Is this protein real? The genomics and transcriptomics think it is, but do we have mass spectra to back it up? Is there a good reason why we don't? Those kinds of questions are still challenging.

This open paper provides great perspective on how we can use the diverse resources of our community to answer these questions!

It's not going to be easy -- but we have all sorts of tools and great scientists out there who may have already solved the question of "is this transcript expressed and where?" We just need to bring it all together!

Friday, July 28, 2017

Tube gel digestion?

(Clicking should expand it!)

In-gel digestion appears to be making a comeback in the literature (it works so well, it's hard to imagine it ever going away, despite the technical challenges). There is even a spiffy new term for it that eludes me right now that I should add to the translator when I remember.

(BTW, I consider the biggest challenges of SDS-PAGE in-gel digestion to be time, loading control, reproducibility, increased sample handling leading to contamination, etc.)

This recent paper suggests a tube-gel digestion protocol that looks like it minimizes a lot of these!

They spike UPS1 protein standards in controlled amounts into a yeast lysate background and compare in-solution, standard in-gel digestion, and their tube based protocol. Label free quan is performed on a quadrupole-Orbitrap and that data is processed in MaxQuant.

Not only do they minimize the sample handling, but they appear to retrieve more yeast protein identifications than the other two methods while consistently identifying more of the UPS1 proteins at the lower dilution spike-ins.

I really like this method because it doesn't take too much to imagine automating several of their steps.

All the RAW data is available at ProteomeXchange here (PXD003841)

Thursday, July 27, 2017

pCLAP -- A new method for RNA-protein binding analysis

It seems like I'm hearing more about RNA-protein binding all the time. The genetics people have ways to do this from the nucleotide side. Maybe those are straight-forward, but I'll have to do a lot of homework to figure out what they are talking about.

If someone in your department has asked you about doing this stuff, you should check out this new paper at JPR!

From the mass spec side this looks really straightforward. Even the data processing doesn't seem to require magic. It's all plus/minus analysis. A peptide disappears in the sample prepped one way, but is present in the other one -- must be RNA binding!

All of the work and power in the methodology is on the sample prep side, so hopefully you have someone great who does that for you! They validate the assay by using a human cancer cell line and identify about 1,000 RNA binding sites -- many previously characterized, and a bunch of new ones!

Wednesday, July 26, 2017

Membrane proteomics of breast cancer brain metastasis!

Did you know that there are specific breast cancer cell lines that are known to preferentially metastasize to the brain? I didn't! They have one called MDA-MB-231BR (please excuse typos) that has this terrifying behavior (they actually call it brain-seeking behavior...

...sorry...had to lighten this up some..)

They do membrane enrichment by ultracentrifugation at 150,000 x g and quantify proteins in this cell line and other cells from the same tissue that aren't known to have this trait -- AND they compare the quantitative membrane profile to a brain cancer cell line. The proteomics is single shot in triplicate using a standard "high/low" method on an LTQ Orbitrap Velos system. (They also spend some time optimizing their membrane prep procedure).

Data processing is done in PD 1.2 -- and quan is done with spectral counts in Scaffold.

The general idea is that there might be some sort of pattern at the cell membrane that is up-regulated in the brain seeking line, not present in the others, that would confirm in the brain cancer line.

Using their method, they find a series of potential candidates.

I downloaded this data from ProteomeXchange here (PXD005719). I reprocessed the data with SequestHT/Percolator/Uniprot-SwissProt and get very similar protein numbers to what they find.

This brings me around to why I was downloading their data anyway. I was curious to see how a membrane prep proteomics procedure compares in coverage to an ultradeep proteome such as the Bekker-Jensen et al., dataset.

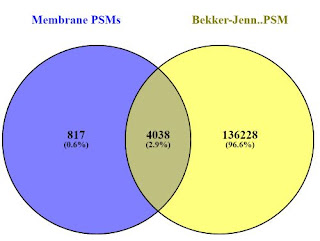

I pulled the breast cancer cell line from the Bekker-Jensen repository and processed it side by side with the MDA-MB-231BR RAW files from this study. There is some apples-to-oranges going on here (vastly different chromatography, different instruments, etc.), but a comparison at the protein level is pretty interesting (at least to me). The overlap at the protein group level (thanks Venny!) looks something like this:

And if we compare the PSMs: (P.S. Chrome does NOT like when you load 390,000 peptide sequences into it. It kept throwing me popup errors, but eventually completed. Also worth noting, Venny removes duplicates, so the PSMs actually match the peptide group counts.)

Honestly, I was a little surprised and checked this again. Considering the absolutely amazing depth achieved in the Bekker-Jensen experiments, I did not expect 10% of the proteins identified by Peng et al. to be novel to their set. Sure, it's less than 80 proteins, but it appears to include at least a couple of the ones that downstream analysis tells them are interesting.

This obviously isn't a perfect experiment. Honestly, this is pretty bad science. It's completely possible that the 76 proteins found in the MDA-MB-231BR line aren't even expressed in the cell line used in the other study. I could eliminate factors like parsimony and grouping errors with a better experimental design on my side, and may still do that (Bekker-Jensen cell lines take 8 hours on my PC -- without LFQ...so I'm not in a huge rush...). I'm putting this here because I came into this little experiment with a preconceived expectation of the outcome: that if the proteomic analysis was deep enough, membrane enrichment might not be as necessary as we once thought it was. I'm going to leave this lazy analysis here because it seems to suggest the opposite.
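For anyone who would rather not paste 390,000 sequences into a browser, the same kind of overlap comparison can be sketched in a few lines of Python. This is only a rough sketch: the accession lists are made-up placeholders, and the function name `compare_protein_sets` is my own invention, not something from Venny or either paper.

```python
def compare_protein_sets(study_ids, reference_ids):
    """Summarize the overlap between two lists of protein (or peptide) identifiers."""
    # Converting to sets drops duplicate entries, much like Venny does.
    study = set(study_ids)
    reference = set(reference_ids)
    unique_to_study = study - reference
    percent_unique = 100.0 * len(unique_to_study) / len(study) if study else 0.0
    return {
        "shared": len(study & reference),
        "only_study": len(unique_to_study),
        "only_reference": len(reference - study),
        "percent_study_unique": percent_unique,
    }


# Hypothetical accessions, purely for illustration (note the duplicate entry).
membrane_prep = ["P00001", "P00002", "P00003", "P00003", "P00004"]
deep_proteome = ["P00001", "P00002", "P00003", "P00005", "P00006"]

print(compare_protein_sets(membrane_prep, deep_proteome))
```

In practice the two lists would come from the accession columns of the exported protein group tables, and this does nothing to address the parsimony and grouping caveats.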

Oh -- and this is a good paper with an interesting experimental design you should check out!

(Dorte, if you happen to see this, sorry about the extra "n"s all over the place.)

Tuesday, July 25, 2017

Dinosaur protein sequencing!

I swear, I would absolutely credit the person who photoshopped this together, but Google Images doesn't know who this mastermind is, just that the original image was on Tumblr here.

Credit for finding the paper I'm about to ramble about goes to ProteomeXchange, where I was just sleepily browsing for some new RAW files to torture my PC with -- and BOOM! Awesome paper with an okay title.

Improved title suggestion: WE TOTALLY SEQUENCED SOME DINOSAUR PROTEIN WITH AN FTICR! DOWNLOAD THE DATA, WE AREN'T MESSING AROUND!

First off, this isn't novel. Some of these authors and others have published studies like this before. The one that first comes to my mind is the 2009 Science study. However, that one was met with a pretty serious degree of controversy. (Side note: I consulted on a potential dinosaur protein sequencing project maybe 5-6 years ago that looked super positive, till I BLAST'ed all my de novo results. Getting clean and pure samples this old is REALLY hard.)

So. Why this paper? Because it absolutely supports the 2009 study! The protein removal process was streamlined and improved to minimize handling (the Sample handling and anticontamination procedure section of the Materials and Methods is intimidating). Even better, the improvements in the available sequences to compare this with -- and the improvements in proteomics algorithms -- make this all more valuable. Even finding one new peptide sequence can improve our understanding of dinosaur phylogeny -- and they find a whole lot more than one!

It has got to be awesome to go through so much work -- where you've got to have some doubt in your mind if something is gonna be there -- this is a 60 million year old rock -- and then...

BOOM! Clean blanks and Dinosaur Protein!!

It's 8 o'clock! Time to wrap this up. They use a 12 Tesla LTQ-FTICR and employ both PEAKS and Byonic to sequence the results. The search tolerances are kept extremely tight and not one peptide is reported with an MS1 discrepancy over 4.5 ppm. In fact, only 5 fragments are reported in the whole study with an MS/MS tolerance over 20 ppm.

They use the collagen sequences in a variety of ways (comparing to known modern and prehistoric collagen sequences) to improve our knowledge of the phylogenetic trees and evolutionary placement of this and other dinosaurs.
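If the ppm numbers don't mean much to you, the arithmetic behind a mass tolerance is just the difference between observed and theoretical m/z, scaled by the theoretical value. Here is a minimal sketch; the m/z values are invented for illustration and are not taken from the paper.

```python
def ppm_error(observed_mz: float, theoretical_mz: float) -> float:
    """Signed mass error in parts per million."""
    return (observed_mz - theoretical_mz) / theoretical_mz * 1e6


def within_tolerance(observed_mz: float, theoretical_mz: float, tol_ppm: float) -> bool:
    """True if the absolute mass error is at or below the tolerance."""
    return abs(ppm_error(observed_mz, theoretical_mz)) <= tol_ppm


# Hypothetical precursor: about 4 ppm off, so it passes a 4.5 ppm cutoff.
print(within_tolerance(1000.0040, 1000.0000, tol_ppm=4.5))
# Hypothetical precursor: about 5 ppm off, so it fails the same cutoff.
print(within_tolerance(1000.0050, 1000.0000, tol_ppm=4.5))
```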

Monday, July 24, 2017

Detecting protein variants -- a great current how-to guide!

As the sample depth of proteomic analysis continues to improve thanks to better/faster mass specs, better sample prep and more sophisticated separation, detecting protein variants is becoming a lot easier. "-er" is an important addition to this word -- 'cause it sure hasn't gotten to the "easy" point.

This is a really good "How to" guide that talks about strategies and common pitfalls!

They do a lot of meta-analysis of one of my favorite datasets -- the NCI-60 deep proteomes (Orbitrap Elite, high/high dataset with 24 SCX fractions for each cell line), though they apply these protocols to the "shallow" proteomes as well (Orbi XL high/low with 12 fractions), which provides a great perspective for mining repositories!

Sunday, July 23, 2017

Quantitative assessment of digestion techniques for protein-protein interactions!

This new study ASAP at JPR is an incredibly thorough (QUANTITATIVE!) analysis of different pull down and digestion techniques for Protein-Protein Interaction (PPI) experiments.

Honestly, I'm filing it away as a reference for the next time I try to do one of these. This thing is a textbook of how to optimize a PPI experiment.

Of course, this isn't the first one of these we've seen, but rather than doing PSM counts or even # of peptides/proteins ID'ed, this study does LFQ with MaxQuant (quadrupole Orbitrap) and then uses stable isotope SRM quantification.

An interesting observation is that higher abundance proteins are easily quantifiable just about regardless of the pull-down and digestion methods. To get to the lower abundance things, they really need to fine tune the methodology.

Saturday, July 22, 2017

How does Proteome Discoverer maintenance work?

I've gotten a lot of questions about Proteome Discoverer Maintenance and how it works. I wanted to put this post together so I can reference it when the next question comes in.

Proteome Discoverer 1.4/2.0/2.1/2.2 all appear to reference a shared maintenance key. If you get a quote from your vendor here in MD, the maintenance key says "Proteome Discoverer 1.4 maintenance." I'm not sure why, but I'm going to assume it is hard to change the product description. Either that, or my local rep does it wrong....maybe explaining the questions....

If you go into any version of Proteome Discoverer you should be able to add that maintenance key.

Admin/Manage licenses. Should look something like this:

Here you can add the activation code. Then you should be able to upgrade to any version of Proteome Discoverer following something like these instructions.

If your maintenance is expired (you may have to click "show expired licenses") then you won't be able to upgrade. You will be able to try out the demo if the demo has never been installed on that PC, though!

Friday, July 21, 2017

Proteomics of white button mushrooms post-harvest

I honestly started reading this paper

because I was 12% awake and the main question I had was something like...

If I'd stopped at the abstract, I'd have known that this group is interested in finding biomarkers that will enable them to select for mushrooms that have enhanced shelf life! As my espresso is being absorbed, this seems like a better idea. I imagine as someone is selectively breeding mushrooms they are probably first focusing on obvious phenotypes like size and shape, but how long that mushroom will last on the shelf might be a lot more problematic to test for at the farm.

Off topic: Did you know that portabello and white button mushrooms are all the same species? Just different stages in the maturation process? 5.1 million fungi species on this planet, and in my country we essentially eat just one of them...maybe this is where I should put the Catbug picture I used above....

Back to the paper! They get a bunch of mushrooms post-harvest and drop them into liquid nitrogen and manually grind the tissue into powder and extract the proteins/peptides in an undisclosed manner. The tryptic peptides are labeled with 4-plex isobaric tags and an online 2D-LC/MS method is used following a protocol in a paper that is not open access. Data is processed with Protein Pilot and Mascot using manufacturer settings.

In the end they obtain 5,878 peptides that correspond to around 1,000 proteins. Over 250 proteins change post-harvest across the datasets. Surprisingly, about 100 proteins are up-regulated! Naively, I assumed that everything would be degradation post-cell death.

The authors pick a few proteins that are shared between the multiple time points and about half of them appear to match via RT-PCR.

Thursday, July 20, 2017

How to do quantification of reporter ion quan replicates in PD 2.2

Thanks, Dr. K, for this first great PD 2.2 question that Dr. P didn't cover in his/her videos!

Here is the question: If you have an experiment like this reporter ion one above where your TMT-10plex set has replicates within its set, how do you set that up so that PD 2.2 knows these are replicates and will allow you to get p-Values and use Volcano plots?

First off, you're going to need 2 study factors -- one for your samples and one for your replicates. Something like this will work for the example above

Here I've set up 5 conditions for my TMT-10plex control runs I did with a friend in Boston a few years ago. This is human cell digest added in a ratio of 1:2:4:8 with 2 replicates of each (and 4 1:1).

Next, I'll have to set up the Samples tab so they know which is which. Something like this

I just called the replicates 1 and 2, in order, for simplicity's sake.

Now, when I go to the Grouping and Quantification tab, when I select my conditions, this "Nested Design" wording pops up and my samples look like this:

DISCLAIMER: (Maybe this should be at the top) I don't know if this is how you do this. There is probably a better way. In this example it looks like the ratio of B1/A1 is obtained and then compared to the B2/A2. Works for me for this example, but please only take this as a way to get started!

What do the results look like? Spot on!

I'll have to look at the RAW file again, 'cause it looks like A and E are the 1:1 (and we had a bit of variance due to our pipette accuracy at 1 uL), but the other channels look right and E/A looks about 1:1.

Now that we have replicates, PD allows us to create Volcano plots to find what is statistically and quantitatively interesting. They should look like this (from a Compound Discoverer set we're working on):

However, if ALL your peptides are 1:1 or 1:2...that doesn't work very well...the 1:1 sample looks like this:

(...everything is at/around 1...I made this even messier by just processing peptides in a very narrow m/z range so that I could do several iterations before I had to go to work)

Follow-up question: Where are the p-Values coming from in the Volcano plot?

They are the -Log10 (unadjusted) Abundance ratio p-Values for the peptide/protein.

In the default template you probably only see the Adjusted p-Values. You can unhide this value by clicking the Field Chooser (1) and then checking (2) above.

If you've also forgotten all of high school (Logs?), the way to convert the Abundance Ratio P-Value in the sample output to the one in the Volcano plot in Excel is something like "=ABS(LOG10(value))". Took me a couple tries to get it right and the numbers match.
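For those who would rather do this outside of Excel, here is the same conversion as a small Python sketch. The function name `volcano_y` and the example p-values are mine, not anything from PD. For any p-value between 0 and 1, the negative log10 gives the same number as the ABS(LOG10()) trick.

```python
import math


def volcano_y(p_value: float) -> float:
    """Convert a raw p-value (0 < p <= 1) to the -log10 scale used on a volcano plot y-axis."""
    if not 0.0 < p_value <= 1.0:
        raise ValueError("p-value must be greater than 0 and at most 1")
    return -math.log10(p_value)


# Made-up p-values for illustration: smaller p-values land higher on the plot.
for p in (0.05, 0.01, 0.001):
    print(p, round(volcano_y(p), 3))
```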

Wednesday, July 19, 2017

Proteome Discoverer 2.2 is now available on the Thermo Omics Portal!

An amazing scientist at the NIH contacted me and told me that Proteome Discoverer 2.2 is live on the Thermo Omics portal! (Thank you Dr. J!)

Demo versions are available, as well as an upgrade key that will work if your copy of Proteome Discoverer has valid maintenance.

The instructions to upgrade to PD 2.2 are about the same as the instructions I posted on how to upgrade to PD 2.0 here a while back.

Once you get upgraded you'll find that PD 2.2 is VERY similar to PD 2.1, just with some awesome new features. For the biggest changes, you'll find some videos over there --->

that may be useful (Thank you Dr. P!)

Tuesday, July 18, 2017

Amazing quantitative coverage of the RBC proteome!

I gotta run, but I want to leave this great paper ASAP at JPR here.

This is the second paper I've posted this year on the RBC proteome. The first paper (post here) suggested that we have been taking our knowledge of this "simple" cell for granted and there is more to discover. This new paper definitely supports this assertion!

They start with RBCs from 4 individuals and digest them with a modified med-FASP (multiple enzyme) methodology. They do something really cool here, lysing part of the RBC population to form "white ghost" cells (which appear to just be empty membranes) and digesting/running them separately. This approach reveals a more comprehensive RBC proteome than we've seen before as well as some new information.

They show clear evidence of some membrane transporters that have not previously been seen in RBCs and show that RBCs contain over 2,500 distinct proteins. I expected this study to use the proteomics ruler, but the reference for the math leads me to this paper on the Total Protein Approach (which looks awesome! but I have to go do work rather than spend time on it). Using this they can get a really clear number for the copies of each protein per cell. About 1,800 of the ones they identify are present in the RBCs at >100 copies per cell -- meaning that there are a bunch here at less than 100 copies per cell -- and they have the sensitivity to identify and quantify them. Not the focus of the paper, but something I'm still amazed to see.

Some of their global observations don't jibe with our historical understanding of protein abundance in RBCs -- so they order stable isotope labeled peptides and show that they are right. Global proteomics "relative quan", FTW!
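As far as I understand the Total Protein Approach, the math is refreshingly simple: a protein's share of the total MS signal is treated as its share of the total protein mass, and dividing by molecular weight turns that into moles per gram of total protein. Here is a rough sketch of how that could become copies per cell. The protein mass per cell and all of the example numbers are assumptions I made up for illustration, not values from the paper.

```python
AVOGADRO = 6.02214076e23  # molecules per mole


def copies_per_cell(protein_signal: float,
                    total_signal: float,
                    mw_g_per_mol: float,
                    protein_per_cell_pg: float) -> float:
    """Estimate copies per cell from MS signal, in the spirit of the Total Protein Approach.

    protein_per_cell_pg is an assumed input (total protein mass per cell, in picograms).
    """
    # The fraction of the total MS signal is taken as the fraction of total protein mass.
    mass_fraction = protein_signal / total_signal
    # Moles of this protein per gram of total protein.
    mol_per_g_total = mass_fraction / mw_g_per_mol
    protein_per_cell_g = protein_per_cell_pg * 1e-12
    return mol_per_g_total * AVOGADRO * protein_per_cell_g


# Hypothetical protein: one millionth of the total signal, 50 kDa, 30 pg protein per cell.
print(round(copies_per_cell(1.0, 1e6, 50_000.0, 30.0)))
```

With these invented inputs the estimate lands in the hundreds of copies per cell, the same neighborhood as the low abundance proteins described above.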

Monday, July 17, 2017

Changes in protein turnover in aging nematodes!

(C. elegans image borrowed from Genie Research)

This morning I learned that protein turnover slows down as most eukaryotic organisms age, which sounds like a dumb idea to me.

Fortunately, this great team in Belgium already knew about this and set up a really elegant C. elegans system to study this event and what is controlling it!

The method is really cool. They feed E. coli 15N or 14N containing NH4Cl. Then they put synchronized nematodes on plates of the labeled E. coli. They can have the worms eating labeled bacteria for however long they want and then move the worms to unlabeled bacteria. When they extract the proteins/peptides from the worms they can assess the protein turnover levels by comparing time vs heavy/light N. I don't know about you, but I'm impressed!
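To make the "time vs heavy/light N" idea a little more concrete, here is a toy sketch. It assumes simple first-order kinetics, where the fraction of the original label left in a peptide decays exponentially after the switch. That assumption and the function names are mine, and this is not necessarily what the authors' R package does. All numbers are simulated.

```python
import math


def label_fraction(old_label_intensity: float, new_label_intensity: float) -> float:
    """Fraction of a peptide's signal that still carries the original label."""
    return old_label_intensity / (old_label_intensity + new_label_intensity)


def fit_turnover_rate(times, fractions):
    """Fit -ln(fraction) = k * t by least squares through the origin and return k."""
    numerator = sum(t * -math.log(f) for t, f in zip(times, fractions))
    denominator = sum(t * t for t in times)
    return numerator / denominator


def half_life(k: float) -> float:
    """Time for half of the original label to be replaced."""
    return math.log(2) / k


# Simulated peptide: time points in days, fractions generated with k = 0.5 per day.
times = [1.0, 2.0, 4.0]
fractions = [math.exp(-0.5 * t) for t in times]

k = fit_turnover_rate(times, fractions)
print(round(k, 3), round(half_life(k), 3))
```

A slower turnover protein would simply show a smaller k and a longer half-life, which is the sort of per-protein readout described below.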

Not only can they assess overall protein turnover speed, but they can assess protein turnover speed of individual proteins. They pull a total of 54 samples and do 3 biological replicates for their downstream stats. The peptides were analyzed on an LTQ Orbitrap and peptide identifications were obtained with a pipeline that includes MS-GF+.

Even more cool stuff -- they have an R package that they developed that can do all the 15N/14N computations! You can get that here.

How'd they do?

They could accurately track turnover in nearly 900 peptides throughout all these samples that correspond to about 600 proteins. This gives them a really good picture of different cellular compartments and proteins of different molecular function.

In interpreting this data, the paper gets even better! Even in an organism this simple, turnover isn't just slowing down uniformly. It is a mixed bag. A few proteins even increase in turnover. They draw some really thought-provoking biological interpretations regarding the systems protecting eukaryotic cells from proteome collapse that are better left to this great open paper.

Clever system, great free software for the community, AND awesome biology? If you need an inspirational paper to start your week off right, I highly recommend this one!

Sunday, July 16, 2017

Fascinating GWAS proteomics(?) study.

This post is going to be a bit of a Sunday morning ramble. It began when this interesting paper showed up in my Twitter feed a couple times today.

And it caught my attention because of the associated text in the retweets:

GWAS is Genome Wide Association Study (wikipedia here). Generally, they proceed in this way: hundreds or thousands of people are tested with SNP arrays that can detect literally millions of different genetic variants. The participants are divided by their phenotype or disease state and inferences are made between the signals of variants between the phenotypes.

Lots of good stuff has come from GWAS, and lots still will as the tools continue to improve. If all goes well you will identify an Expression Quantitative Trait Loci (eQTL) or two that is associated with your disease. GWAS via SNP doesn't identify a gene that is associated with your disease. It identifies an area in the genome that is associated with your disease. In the best case scenario, you are working with a really well annotated genome and a gene with really well understood mechanisms of expression.

Side note: As of this Nature article in 2011, 96% of the 1.7 MILLION samples in the global GWAS catalog were from people of European descent. In this Nature followup last year, this appears to have improved, but there are still shockingly large discrepancies (that same library now has 35 MILLION samples). These articles point out the problems with developing genetic medicines for only certain populations. However, if you are really bored (or interested), check out this paper and the concept of linkage disequilibrium. Genomes aren't static. They can't be or that evolution thing doesn't work very well. You may not be able to make an inference from the effect of one point on a genome from one population to the next, 'cause that gene might be different or somewhere else entirely.

Wow. What was I writing about? Oh yeah! Okay, so GWAS is powerful, but we're inferring a lot of stuff 1) that place that looks upregulated is linked to that gene 2) it is linked to that gene in a way we understand (upregulation of that area could cause regulation of the associated gene to go UP or Down)

If you're still reading along (sorry) you can see why I might do a double-take on a GWAS proteomics study. You might also understand why I might read a paper and be a little surprised that no direct protein measurements were ever performed in this study.

This paper introduced me to the concept of pQTLs. These are QTLs associated with protein levels. 71 proteins known to be associated with cardiovascular disease were integrated as factors here.

My interpretation (which is likely wrong) is that rather than saying cohort A vs cohort B, the factors compared were patient group A who had CRP levels above X.X mg/dL compared to patients with CRP below that level. Then you look for QTLs that stand out.

How did they fare? Pretty well. They make some interesting biological conclusions and relate those to the characteristics that the patients manifest. They come up with 20 or so observations where the GWAS predicts the proteins that they know are elevated from the patient files. They find some other QTLs that seem to be associated with the known elevated proteins that might make for better predictive models of different stages of cardiovascular diseases down the road. Some enterprising CVD researcher should pull out this list and see if they do correspond at the protein level.

Is it really a proteomics study? Well...it's more of a transcriptomics study with some integration to a small set of proteins, but it is interesting and it forced me to read 2 or 3 papers to get to this (likely incorrect) interpretation of what they set out to do -- and whether it worked.

Got some guy doing GWAS down the hall and wondering if you could work together? Maybe you should check out this paper!

Incidentally, last summer a great study came out of some lab at Harvard where QTL and protein quantification were systematically compared using an amazing mouse model system. I wrote a post on it here that certainly didn't do it justice, but the original paper is seriously good and helps bridge some gaps -- including terminology and can give you a feel for when you can trust QTL measurements and when you can't.

And it caught my attention because of the associated text in the retweets:

GWAS is Genome Wide Association Study (wikipedia here). Generally, they proceed in this way: hundreds or thousands of people are tested with SNP arrays that can detect literally millions of different genetic variants. The participants are divided by their phenotype or disease state and inferences are made between the signals of variants between the phenotypes.

Lots of good stuff has come from GWAS, and lots still will as the tools continue to improve. If all goes well you will identify an Expression Quantitative Trait Loci (eQTL) or two that is associated with your disease. GWAS via SNP doesn't identify a gene that is associated with your disease. It identifies an area in the genome that is associated with your disease. In the best case scenario, you are working with a really well annotated genome and a gene with really well understood mechanisms of expression.

Side note: As of this Nature article in 2011, 96% of the 1.7 MILLION samples in the global GWAS catalog were from people of European descent. In this Nature followup last year, this appears to have approved, but there shockingly large discrepancies (that same library now has 35 MILLION samples). These articles point out the problems with developing genetic medicines for only certain populations. However, if you are really bored (or interested), check out this paper and the concept of linkage disequilibrium. Genomes aren't static. They can't be or that evolution thing doesn't work very well. You may not be able to make an inference from the effect of one point on a genome from one population to the next, cause that gene might be different or somewhere else entirely.

Wow. What was I writing about? Oh yeah! Okay, so GWAS is powerful, but we're inferring a lot of stuff 1) that place that looks upregulated is linked to that gene 2) it is linked to that gene in a way we understand (upregulation of that area could cause regulation of the associated gene to go UP or Down)

If you're still reading along (sorry) you can see why I might do a double-take on a GWAS proteomics study. You might also understand why I might read a paper and be a little surprised that no direct protein measurements were ever performed in this study.

This paper introduced me to the concept of pQTLs. These are QTLs associated with protein levels. 71 proteins known to be associated with cardiovascular disease were integrated as factors here.

My interpretation (which is likely wrong) is that rather than saying cohort A vs cohort B, the factors compared were patient group A who had CRP levels above X.X mg/dL compared to patients with CRP below that level. Then you look for QTLs that stand out.
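If that interpretation holds (and again, it might not), the idea can be sketched in a few lines of Python. This is a toy illustration, not the paper's pipeline: the SNP names, protein levels, threshold, and the simple allele-count chi-square test are all assumptions picked to keep the example small. Real pQTL analyses typically regress the continuous protein level on genotype with covariates rather than splitting patients at a cutoff.

```python
def allelic_chi2(high, low):
    """Chi-square statistic for a 2x2 allele-count table.

    `high` and `low` are lists of genotypes (0, 1 or 2 copies of the
    minor allele) for patients above and below the protein threshold.
    """
    a = sum(high)               # minor alleles, high-protein group
    b = 2 * len(high) - a       # major alleles, high-protein group
    c = sum(low)                # minor alleles, low-protein group
    d = 2 * len(low) - c        # major alleles, low-protein group
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        return 0.0              # a row or column is empty: no signal to test
    return n * (a * d - b * c) ** 2 / denom


def scan_snps(genotypes, protein_levels, threshold):
    """Split patients at `threshold`, then rank SNPs by chi-square statistic."""
    high_idx = [i for i, p in enumerate(protein_levels) if p > threshold]
    low_idx = [i for i, p in enumerate(protein_levels) if p <= threshold]
    scores = {}
    for snp, calls in genotypes.items():
        scores[snp] = allelic_chi2(
            [calls[i] for i in high_idx],
            [calls[i] for i in low_idx],
        )
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical data: six patients, one protein (think CRP), two made-up SNPs.
protein_levels = [0.5, 0.8, 1.2, 3.5, 4.0, 5.1]
genotypes = {
    "snp_linked": [0, 0, 0, 2, 2, 2],     # tracks the protein level
    "snp_unlinked": [1, 0, 1, 1, 0, 1],   # same allele counts in both groups
}

ranked = scan_snps(genotypes, protein_levels, threshold=3.0)
for snp, stat in ranked:
    print(snp, round(stat, 2))
```

The SNP whose genotype follows the protein split floats to the top of the list, which is the "look for QTLs that stand out" step. With six patients this is only a cartoon; an actual study needs a large cohort, multiple-testing correction, and population-structure controls.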

How did they fare? Pretty well. They draw some interesting biological conclusions and match those to the characteristics that the patients manifest. They come up with 20 or so observations where the GWAS predicts the proteins that they know are elevated from the patient files. They find some other QTLs that seem to be associated with the known elevated proteins and that might make for better predictive models of different stages of cardiovascular disease down the road. Some enterprising CVD researcher should pull out this list and see if they do correspond at the protein level.

Is it really a proteomics study? Well...it's more of a transcriptomics study with some integration to a small set of proteins, but it is interesting and it forced me to read 2 or 3 papers to get to this (likely incorrect) interpretation of what they set out to do -- and whether it worked.

Got some guy doing GWAS down the hall and wondering if you could work together? Maybe you should check out this paper!

Incidentally, last summer a great study came out of some lab at Harvard where QTL and protein quantification were systematically compared using an amazing mouse model system. I wrote a post on it here that certainly didn't do it justice, but the original paper is seriously good and helps bridge some gaps -- including terminology -- and can give you a feel for when you can trust QTL measurements and when you can't.

Saturday, July 15, 2017

Picky -- take all the work out of targeted human proteomics!

Targeted proteomics is cool and all, but don't you hate entering ion mass and retention time into those stupid windows? It is even worse if you're using one of those quadrupole things and you have to load up fragment ions and optimized collision energies...ugh...

What if there was an easy web interface that would just make the method for you for any human protein you select?

Say hello to Picky, which you can read about here!

It makes use of the Proteome Tools database (synthetic peptides for ALL human proteins!) plus knowledge of retention time and instrument parameters, and it completely simplifies the worst part of the targeted proteomics process.

Heck, why don't you just give it a shot? You can check out the awesome Picky user interface here!

Friday, July 14, 2017

UHPLC-LCMS lipidomics of dog plasma can differentiate breeds!

I admit it. I used this awesome new paper in Springer Metabolomics to justify Google Image searching for pictures of 9 different dog breeds in funny hats and used my favorites to illustrate a figure from the paper. You caught me. However, I also think that this is a really interesting study with results I would not have guessed possible.

First off, I'm no lipidomics expert. I did spend a couple days at Pitt's center for Environmental and Occupational Health which is one of the premier lipidomics centers in the world. What I got out of that visit, however, was mostly that lipidomics is really really hard and I'm glad they were doing it and not me.

The conclusion of the study I'm talking about now is in the title of the post and the paper. I would never have imagined that during our occasionally demented differentiation of wild dogs into this diverse set of physical features that we would also alter their plasma lipidomes, but it looks like we did!

They took plasma from around 100 dogs. n > 9 in all cases. These weren't lab controlled dogs, they were plasma samples from healthy dogs that selflessly volunteered some plasma for science, then went home to their different houses, probably put on funny hats and ate different food. There are a TON of variables here.

The lipids were extracted from the plasma in a very straightforward manner and separated on a C-18 column. MS1 spectra were acquired at 100,000 resolution on a benchtop Orbitrap (appears to be an Exactive "classic" system). Post-processing, lipids of interest were validated by MS/MS via direct infusion using a NanoMate-coupled FT-ICR (LTQ-FT Ultra).

All the data was processed in XCMS and FIEmspro (new to me) -- and, you know what? The breeds are distinct. The paper is Open Access. If you're curious, you should totally check out the PCA plots! The breeds are totally distinct!

From the global profiles they are able to hunt down the features/compounds that are driving breed clustering. This is another reason to check out this awesome paper -- in case you're like me -- and don't know how to make that step from principal components to biomarker. I'm still unclear after reading this, but I think this is a cognitive deficiency on my part, because this is a very clear and well-written paper. They provide some great references that I hope will help me bridge this gap eventually.
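For what it's worth, one common way to get from principal components to candidate features is to look at the loadings: the features with the largest absolute weight on a component are the ones driving the separation along it. Below is a minimal sketch of that idea in plain Python. It is not the workflow from the paper (they used XCMS and FIEmspro); the lipid names and intensities are made up, and the power-iteration PCA is just a dependency-free stand-in for a real PCA routine.

```python
def first_pc_loadings(rows, iterations=200):
    """Loadings of the first principal component, found by power iteration.

    `rows` is a list of samples, each a list of feature intensities.
    """
    n, m = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(m)]
    centered = [[r[j] - means[j] for j in range(m)] for r in rows]
    # Sample covariance matrix, features x features.
    cov = [
        [sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(m)]
        for i in range(m)
    ]
    v = [1.0] * m  # starting guess; refined on each pass
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(m)) for i in range(m)]
        norm = sum(x * x for x in w) ** 0.5
        if norm == 0:
            break  # no variance at all in the data
        v = [x / norm for x in w]
    return v


def rank_features(names, rows):
    """Pair each feature with its PC1 loading, largest absolute value first."""
    loadings = first_pc_loadings(rows)
    return sorted(zip(names, loadings), key=lambda nl: abs(nl[1]), reverse=True)


# Hypothetical intensities: three samples each from two imaginary breeds.
names = ["lipid_A", "lipid_B", "lipid_C"]
samples = [
    [10.0, 5.0, 1.0], [10.5, 5.1, 1.1], [9.8, 4.9, 0.9],   # breed X
    [20.0, 5.0, 1.0], [20.4, 5.2, 1.0], [19.7, 4.8, 1.1],  # breed Y
]

ranked_features = rank_features(names, samples)
for name, loading in ranked_features:
    print(name, round(loading, 3))
```

Here lipid_A is the only feature that differs much between the two groups, so it dominates the first component. In real data you would normally scale the features first and then confirm the top candidates with a proper statistical test and MS/MS before calling anything a biomarker.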

On the topic of references in this paper -- I spent some time on this open access one as well.

I'm just linking it in case anyone else wants to know what breeds have the highest cholesterol levels and how those vary throughout a dog's life (the n is only high for a few breeds/genders/age groups, but still interesting to me).
